Chapter 9: Data Analysis – Hypothesis Testing, Estimating Sample Size, and Modeling

This chapter provides the foundational concepts and tools for analyzing data commonly seen in the transportation profession. The concepts include hypothesis testing, assessing the adequacy of the sample sizes, and estimating the least square model fit for the data. These applications are useful in collecting and analyzing travel speed data, conducting before-after comparisons, and studying the association between variables, e.g., travel speed and congestion as measured by traffic density on the road.

Learning Objectives

At the end of the chapter, the reader should be able to do the following:

- Estimate the required sample size for testing.

- Use specific significance tests including, z-test, t-test (one and two samples), chi-squared test.

- Compute corresponding p-value for the tests.

- Compute and interpret simple linear regression between two variables.

- Estimate a least-squares fit of data.

- Find confidence intervals for parameter estimates.

- Use of spreadsheet tools (e.g., MS Excel) and basic programming (e.g., R or SPSS) to calculate complex and repetitive mathematical problems similar to earthwork estimates (cut, fill, area, etc.), trip generation and distribution, and linear optimization.

- Use of spreadsheet tools (e.g., MS Excel) and basic programming (e.g., R or SPSS) to create relevant graphs and charts from data points.

- Identify topics in the introductory transportation engineering courses that build on the concepts discussed in this chapter.

Central Limit Theorem

In this section, you will learn about the the central limit theorem by reading each description along with watching the videos. Also, short problems to check your understanding are included.

Central Limit Theorem

The Central Limit theorem for Sample Means

The sampling distribution is a theoretical distribution. It is created by taking many samples of size n from a population. Each sample mean is then treated like a single observation of this new distribution, the sampling distribution. The genius of thinking this way is that it recognizes that when we sample, we are creating an observation and that observation must come from some particular distribution. The Central Limit Theorem answers the question: from what distribution dis a sample mean come? If this is discovered, then we can treat a sample mean just like any other observation and calculate probabilities about what values it might take on. We have effectively moved from the world of statistics where we know only what we have from the sample to the world of probability where we know the distribution from which the same mean came and the parameters of that distribution.

The reasons that one samples a population are obvious. The time and expense of checking every invoice to determine its validity or every shipment to see if it contains all the items may well exceed the cost of errors in billing or shipping. For some products, sampling would require destroying them, called destructive sampling. One such example is measuring the ability of a metal to withstand saltwater corrosion for parts on ocean going vessels.

Sampling thus raises an important question; just which sample was drawn. Even if the sample were randomly drawn, there are theoretically an almost infinite number of samples. With just 100 items, there are more than 75 million unique samples of size five that can be drawn. If six are in the sample, the number of possible samples increases to just more than one billion. Of the 75 million possible samples, then, which one did you get? If there is variation in the items to be sampled, there will be variation in the samples. One could draw an “unlucky” sample and make very wrong conclusions concerning the population. This recognition that any sample we draw is really only one from a distribution of samples provides us with what is probably the single most important theorem is statistics: the Central Limit Theorem. Without the Central Limit Theorem, it would be impossible to proceed to inferential statistics from simple probability theory. In its most basic form, the Central Limit Theorem states that regardless of the underlying probability density function of the population data, the theoretical distribution of the means of samples from the population will be normally distributed. In essence, this says that the mean of a sample should be treated like an observation drawn from a normal distribution. The Central Limit Theorem only holds if the sample size is “large enough” which has been shown to be only 30 observations or more.

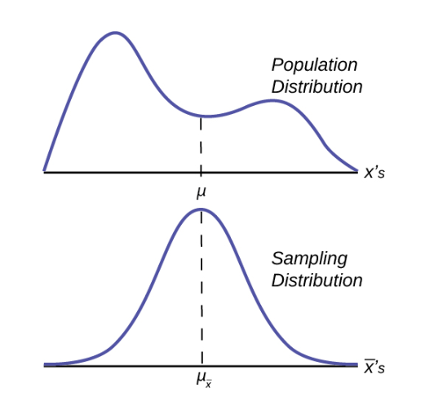

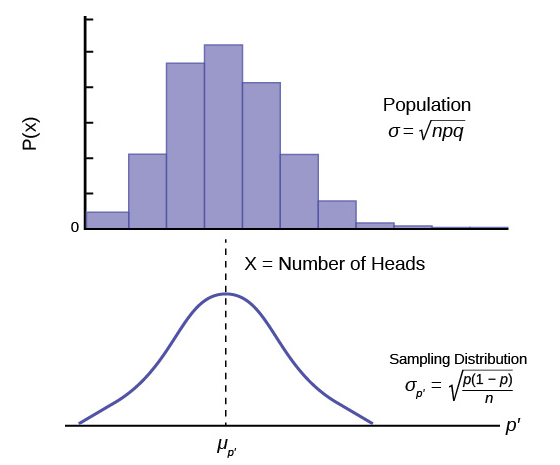

Figure 1 graphically displays this very important proposition.

Notice that the horizontal axis in the top panel is labeled X. These are the individual observations of the population. This is the unknown distribution of the population values. The graph is purposefully drawn all squiggly to show that it does not matter just how odd ball it really is. Remember, we will never know what this distribution looks like, or its mean or standard deviation for that matter.

The horizontal axis in the bottom panel is labeled ![]() . This is the theoretical distribution called the sampling distribution of the means. Each observation on this distribution is a sample mean. All these sample means were calculated from individual samples with the same sample size. The theoretical sampling distribution contains all of the sample mean values from all the possible samples that could have been taken from the population. Of course, no one would ever actually take all of these samples, but if they did this is how they would look. And the Central Limit Theorem says that they will be normally distributed.

. This is the theoretical distribution called the sampling distribution of the means. Each observation on this distribution is a sample mean. All these sample means were calculated from individual samples with the same sample size. The theoretical sampling distribution contains all of the sample mean values from all the possible samples that could have been taken from the population. Of course, no one would ever actually take all of these samples, but if they did this is how they would look. And the Central Limit Theorem says that they will be normally distributed.

The Central Limit Theorem goes even further and tells us the mean and standard deviation of this theoretical distribution.

| Parameter | Population distribution | Sample | Sampling distribution of |

|---|---|---|---|

| Mean | |||

| Standard deviation | s |

The practical significant of The Central Limit Theorem is that now we can compute probabilities for drawing a sample mean, ![]() , in just the same way as we did for drawing specific observations,

, in just the same way as we did for drawing specific observations, ![]() s, when we knew the population mean and standard deviation and that the population data were normally distributed. The standardizing formula has to be amended to recognize that the mean and standard deviation of the sampling distribution, sometimes, called the standard error of the mean, are different from those of the population distribution, but otherwise nothing has changed. The new standardizing formula is

s, when we knew the population mean and standard deviation and that the population data were normally distributed. The standardizing formula has to be amended to recognize that the mean and standard deviation of the sampling distribution, sometimes, called the standard error of the mean, are different from those of the population distribution, but otherwise nothing has changed. The new standardizing formula is

![]()

Notice that ![]()

![]() in the first formula has been changed to simply

in the first formula has been changed to simply ![]() in the second version. The reason is that mathematically it can be shown that the expected value of

in the second version. The reason is that mathematically it can be shown that the expected value of ![]()

![]() is equal to

is equal to ![]() .

.

Sampling Distribution of the Sample Mean

Sampling Distribution of the Sample Mean (Part 2)

Sampling Distributions: Sampling Distribution of the Mean

Using the Central Limit Theorem

Law of Large Numbers

The law of large numbers says that if you take samples of larger and larger size from any population, then the mean of the sampling distribution, ![]()

![]() tends to get close and close to the true population mean,

tends to get close and close to the true population mean, ![]() . From the Central Limit Theorem, we know that as n gets larger and larger, the sample means follow a normal distribution. The larger n gets, the smaller the standard deviation of the sampling distribution gets. (Remember that the standard deviation for sampling distribution of

. From the Central Limit Theorem, we know that as n gets larger and larger, the sample means follow a normal distribution. The larger n gets, the smaller the standard deviation of the sampling distribution gets. (Remember that the standard deviation for sampling distribution of ![]() . This means that the sample mean

. This means that the sample mean ![]() must be closer to the population mean

must be closer to the population mean ![]() as n increases. We can say that

as n increases. We can say that ![]() is the value that the sample means approach as n gets larger. The Central Limit Theorem illustrates the law of large numbers.

is the value that the sample means approach as n gets larger. The Central Limit Theorem illustrates the law of large numbers.

Indeed, there are two critical issues that flow from the Central Limit Theorem and the application of the Law of Large numbers to it. These are listed below.

-

- The probability density function of the sampling distribution of means is normally distributed regardless of the underlying distribution of the population observations and

- Standard deviation of the sampling distribution decreases as the size of the samples that were used to calculate the means for the sampling distribution increases.

Taking these in order. It would seem counterintuitive that the population may have any distribution and the distribution of means coming from it would be normally distributed. With the use of computers, experiments can be simulated that show the process by which the sampling distribution changes as the sample size is increased. These simulations show visually the results of the mathematical proof of the Central Limit Theorem.

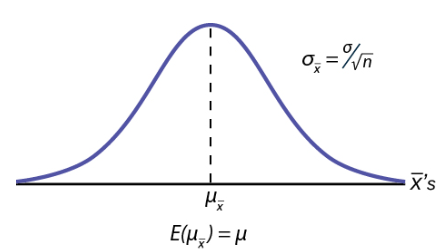

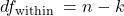

Figure 2 shows a sampling distribution. The mean has been marked on the horizontal axis of the ![]() and the standard deviation has been written to the right above the distribution. Notice that the standard deviation of the sampling distribution is the original standard deviation of the population, divided by the sample size. We have already seen that as the sample size increases the sampling distribution becomes closer and closer to the normal distribution. As this happens, the standard deviation of the sampling distribution changes in another way; the standard deviation decreases as n increases. At very large n, the standard deviation of the sampling distribution becomes very small and at infinity it collapses on top of the population mean. This is what it means that the expected value of

and the standard deviation has been written to the right above the distribution. Notice that the standard deviation of the sampling distribution is the original standard deviation of the population, divided by the sample size. We have already seen that as the sample size increases the sampling distribution becomes closer and closer to the normal distribution. As this happens, the standard deviation of the sampling distribution changes in another way; the standard deviation decreases as n increases. At very large n, the standard deviation of the sampling distribution becomes very small and at infinity it collapses on top of the population mean. This is what it means that the expected value of ![]() is the population mean,

is the population mean, ![]() .

.

At non-extreme values of n, this relationship between the standard deviation of the sampling distribution and the sample size plays a very important part in our ability to estimate the parameters in which we are interested.

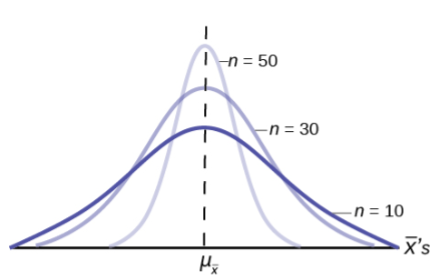

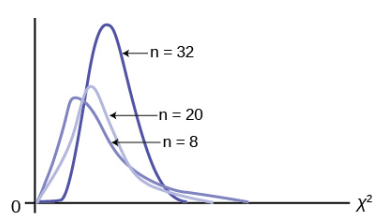

Figure 3 shows three sampling distributions. The only change that was made is the sample size that was used to get the sample means for each distribution. As the sample size increases, n goes from 10 to 30 to 50, the standard deviations of the respective sampling distributions decrease because the sample size is in the denominator of the standard deviations of the sampling distributions.

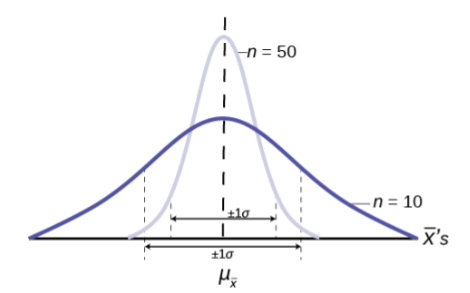

The implications for this are very important. Figure 4 shows the effect of the sample size on the confidence we will have in our estimates. These are two sampling distributions from the same population. One sampling distribution was created with samples of size 10 and the other with samples of size 50. All other things constant, the sampling distribution with sample size 50 has a smaller standard deviation that causes the graph to be higher and narrower. The important effect of this is that for the same probability of one standard deviation from the mean, this distribution covers much less of a range of possible values than the other distribution. One standard deviation is marked on the ![]() axis for each distribution. This is shown by the two arrows that are plus or minus one standard deviation for each distribution. If the probability that the true mean is one standard deviation away from the mean, then for the sampling distribution with the smaller sample size, the possible range of values is much greater. A simple question is, would you rather have a sample mean from the narrow, tight distribution, or the flat, wide distribution as the estimate of the population mean? Your answer tells us why people intuitively will always choose data from a large sample rather than a small sample. The sample mean they are getting is coming from a more compact distribution.

axis for each distribution. This is shown by the two arrows that are plus or minus one standard deviation for each distribution. If the probability that the true mean is one standard deviation away from the mean, then for the sampling distribution with the smaller sample size, the possible range of values is much greater. A simple question is, would you rather have a sample mean from the narrow, tight distribution, or the flat, wide distribution as the estimate of the population mean? Your answer tells us why people intuitively will always choose data from a large sample rather than a small sample. The sample mean they are getting is coming from a more compact distribution.

The Central Limit Theorem for Proportions

The Central Limit Theorem tells us that the point estimate for the sample mean, ![]() , comes from a normal distribution of

, comes from a normal distribution of ![]() . This theoretical distribution is called the sampling distribution of

. This theoretical distribution is called the sampling distribution of ![]() . We now investigate the sampling distribution for another important parameter we wish to estimate, p from the binomial probability density function.

. We now investigate the sampling distribution for another important parameter we wish to estimate, p from the binomial probability density function.

If the random variable is discrete, such as for categorical data, then the parameter we wish to estimate is the population proportion. This is, of course, the probability of drawing a success in any one random draw. Unlike the case just discussed for a continuous random variable where we did not know the population distribution of X’s, here we actually know the underlying probability density function for these data; it is the binomial. The random variable is X = the number of successes and the parameter we wish to know is p, the probability of drawing a success which is of course the proportion of successes in the population. The question at issue is: from what distribution was the sample proportion, ![]() drawn? The sample size is n and X is the number of successes found in that sample. This is a parallel question that was just answered by the Central Limit Theorem: from what distribution was the sample mean,

drawn? The sample size is n and X is the number of successes found in that sample. This is a parallel question that was just answered by the Central Limit Theorem: from what distribution was the sample mean, ![]() drawn? We saw that once we knew that the distribution was the Normal distribution then we were able to create confidence intervals for the population parameter,

drawn? We saw that once we knew that the distribution was the Normal distribution then we were able to create confidence intervals for the population parameter, ![]() . We will also use this same information to test hypotheses about the population mean later. We wish now to be able to develop confidence intervals for the population parameter “p” from the binomial probability density function.

. We will also use this same information to test hypotheses about the population mean later. We wish now to be able to develop confidence intervals for the population parameter “p” from the binomial probability density function.

In order to find the distribution from which sample proportions come we need to develop the sampling distribution of sample proportions just as we did for sample means. So again, imagine that we randomly sample say 50 people and ask them if they support the new school bond issue. From this we find a sample proportion, p’, and graph it on the axis of p’s. We do this again and again etc., etc. until we have the theoretical distribution of p’s. Some sample proportions will show high favorability toward the bond issue and others will show low favorability because random sampling will reflect the variation of views within the population. What we have done can be seen in Figure 5. The top panel is the population distributions of probabilities for each possible value of the random variable X. While we do not know what the specific distribution looks like because we do not know p, the population parameter, we do know that it must look something like this. In reality, we do not know either the mean or the standard deviation of this population distribution, the same difficulty we faced when analyzing the X’s previously.

Figure 5 places the mean on the distribution of population probabilities as ![]() but of course we do not actually know the population mean because we do not know the population probability of success, p. Below the distribution of the population values is the sampling distribution of p’s. Again, the Central Limit Theorem tells us that this distribution is normally distributed just like the case of the sampling distribution for

but of course we do not actually know the population mean because we do not know the population probability of success, p. Below the distribution of the population values is the sampling distribution of p’s. Again, the Central Limit Theorem tells us that this distribution is normally distributed just like the case of the sampling distribution for ![]() . This sampling distribution also has a mean, the mean of the p’s, and a standard deviation,

. This sampling distribution also has a mean, the mean of the p’s, and a standard deviation, ![]() .

.

Importantly, in the case of the analysis of the distribution of sample means, the Central Limit Theorem told us the expected value of the mean of the sample means in the sampling distribution, and the standard deviation of the sampling distribution. Again, the Central Limit Theorem provides this information for the sampling distribution for proportions. The answers are:

-

- The expected value of the mean of sampling distribution of sample proportions,

, is the population proportion, p.

, is the population proportion, p. - The standard deviation of the sampling distribution of sample proportions,

, is the population standard deviation divided by the square root of the sample size, n.

, is the population standard deviation divided by the square root of the sample size, n.

- The expected value of the mean of sampling distribution of sample proportions,

Both these conclusions are the same as we found for the sampling distribution for sample means. However, in this case, because mean and standard deviation of the binomial distribution both rely upon p, the formula for the standard deviation of the sampling distribution requires algebraic manipulation to be useful. The standard deviation of the sampling distribution for proportions is thus:

![]()

| Parameter | Population distribution | Sample | Sampling distribution of p‘s |

|---|---|---|---|

| Mean | |||

| Standard deviation |

Table 2 summarizes these results and shows the relationship between the population, sample, and sampling distribution.

Reviewing the formula for the standard deviation of the sampling distribution for proportions we see that as n increases the standard deviation decreases. This is the same observation we made for the standard deviation for the sampling distribution for means. Again, as the sample size increases, the point estimate for either ![]() is found to come from a distribution with a narrower and narrower distribution. We concluded that with a given level of probability, the range from which the point estimate comes is smaller as the sample size, n, increases.

is found to come from a distribution with a narrower and narrower distribution. We concluded that with a given level of probability, the range from which the point estimate comes is smaller as the sample size, n, increases.

Find Confidence Intervals for Parameter Estimates

In this section, you will learn how to find and estimate confidence intervals by reading each description along with watching the videos included. Also, short problems to check your understanding are included.

Confidence Intervals & Estimation: Point Estimates Explained

Introduction to Confidence Intervals

Suppose you were trying to determine the mean rent of a two-bedroom apartment in your town. You might look in the classified section of the newspaper, write down several rents listed, and average them together. You would have obtained a point estimate of the true mean. If you are trying to determine the percentage of times you make a basket when shooting a basketball, you might count the number of shots you make and divide that by the number of shots you attempted. In this case, you would have obtained a point estimate for the true proportion the parameter p in the binomial probability density function.

We use sample data to make generalizations about an unknown population. This part of statistics is called inferential statistics. The sample data help us to make an estimate of a population parameter. We realize that the point estimate is most likely not the exact value of the population parameter, but close to it. After calculating point estimates, we construct interval estimates, called confidence intervals. What statistics provides us beyond a simple average, or point estimate, is an estimate to which we can attach a probability of accuracy, what we will call a confidence level. We make inferences with a known level of probability.

If you worked in the marketing department of an entertainment company, you might be interested in the mean number of songs a consumer downloads a month from iTunes. If so, you could conduct a survey and calculate the sample mean, ![]() , and the sample standard deviation, s. You would use

, and the sample standard deviation, s. You would use ![]() to estimate the population mean and s to estimate the population standard deviation. The same mean,

to estimate the population mean and s to estimate the population standard deviation. The same mean, ![]() , is the point estimate for the population mean,

, is the point estimate for the population mean, ![]() . The sample standard deviation, s, is the point estimate for the population standard deviation,

. The sample standard deviation, s, is the point estimate for the population standard deviation, ![]() .

.

![]() and s are each called a statistic.

and s are each called a statistic.

A confidence interval is another type of estimate but, instead of being just one number, it is an interval of numbers. The interval of numbers is a range of values calculated from a given set of sample data. The confidence interval is likely to include the unknown population parameter.

Suppose for the iTunes examples, we do not know the population mean ![]() , but we do know that the population standard deviation is

, but we do know that the population standard deviation is ![]() and our sample size is 100. Then, by the Central Limit Theorem, the standard deviation of the sampling distribution of the sample means is

and our sample size is 100. Then, by the Central Limit Theorem, the standard deviation of the sampling distribution of the sample means is ![]() .

.

The Empirical Rule, which applies to the normal distribution, says that in approximately 95% of the samples, the same mean, ![]() , will be within two standard deviations of the population mean

, will be within two standard deviations of the population mean ![]() . For our iTunes example, two standard deviations is

. For our iTunes example, two standard deviations is ![]() . The sample mean

. The sample mean ![]() is likely to be within 0.2 units of

is likely to be within 0.2 units of ![]() .

.

Because ![]() is within 0.2 units of

is within 0.2 units of ![]() which is unknown, then

which is unknown, then ![]() is likely to be within 0.2 units of

is likely to be within 0.2 units of ![]() with 95% probability. The population mean

with 95% probability. The population mean ![]() is contained in an interval whose lower number is calculated by taking the sample mean and subtracting two standard deviations

is contained in an interval whose lower number is calculated by taking the sample mean and subtracting two standard deviations ![]() and whose upper number is calculated by taking the sample mean and adding two standard deviations. In other words,

and whose upper number is calculated by taking the sample mean and adding two standard deviations. In other words, ![]() is between

is between ![]() in 955 of all the samples.

in 955 of all the samples.

For the iTunes example, suppose that a sample produced a sample mean ![]() . Then with 95% probability the unknown population mean

. Then with 95% probability the unknown population mean ![]() is between

is between

![]()

We say that we are 95% confident that the unknown population mean number of songs downloaded from iTunes per month is between 1.8 and 2.2. The 95% confidence interval is (1.8, 2.2). Please note that we talked in terms of 95% confidence using the empirical rule. The empirical rule for two standard deviations is only approximately 95% of the probability under the normal distribution. To be precise, two standard deviations under a normal distribution is actually 95.44% of the probability. To calculate the exact 95% confidence level, we would use 1.96 standard deviations.

The 95% confidence interval implies two possibilities. Either the interval (1.8, 2.2) contains the true mean 𝜇, or our sample produce an ![]() that is not within 0.2 units of the true mean 𝜇. The first possibility happens for 95% of well-chosen samples. It is important to remember that the second possibility happens for 5% of samples, even though correct procedures are followed.

that is not within 0.2 units of the true mean 𝜇. The first possibility happens for 95% of well-chosen samples. It is important to remember that the second possibility happens for 5% of samples, even though correct procedures are followed.

Remember that a confidence interval is created for an unknown population parameter like the population mean, 𝜇.

For the confidence interval for a mean the formula would be:

![]()

Or written another way as:

![]()

Where ![]() s is the sample mean.

s is the sample mean. ![]() a is determined by the level of confidence desired by the analyst, and

a is determined by the level of confidence desired by the analyst, and ![]() is the standard deviation of the sampling distribution for means given to us by the Central Limit Theorem.

is the standard deviation of the sampling distribution for means given to us by the Central Limit Theorem.

A Confidence Interval for a Population Standard Deviation, Known or Large Sample Size

A confidence interval for a population mean, when the population standard deviation is known based on the conclusion of the Central Limit Theorem that the sampling distribution of the sample means follow an approximately normal distribution.

Calculating the Confidence Interval

Consider the standardizing formula for the sampling distribution developed in the discussion of the Central Limit Theorem:

![]()

Notice that ![]() is substituted for

is substituted for ![]() – because we know that the expected value of

– because we know that the expected value of ![]() – is from the Central Limit Theorem and

– is from the Central Limit Theorem and ![]() – is replaced with

– is replaced with ![]() , also from the Central Limit Theorem.

, also from the Central Limit Theorem.

In this formula we know ![]() and n, the sample size. (In actuality we do not know the population standard deviation, but we do have a point estimate for it, s, from the sample we took. More on this later.) What we do not know is

and n, the sample size. (In actuality we do not know the population standard deviation, but we do have a point estimate for it, s, from the sample we took. More on this later.) What we do not know is ![]() We can solve for either one of these in terms of the other. Solving for 𝜇 in terms of

We can solve for either one of these in terms of the other. Solving for 𝜇 in terms of ![]() gives:

gives:

![]()

Remembering that the Central Limit Theorem tells us that the distribution of the ![]() , the sampling distribution for means, is normal, and that the normal distribution is symmetrical, we can rearrange terms thus:

, the sampling distribution for means, is normal, and that the normal distribution is symmetrical, we can rearrange terms thus:

![]()

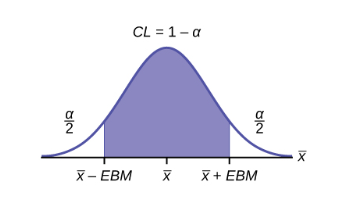

This is the formula for a confidence interval for the mean of a population.

Notice that ![]() has been substituted for

has been substituted for ![]() in this equation. This is where the statistician must make a choice. The analyst must decide the level of confidence they wish to impose on the confidence interval.

in this equation. This is where the statistician must make a choice. The analyst must decide the level of confidence they wish to impose on the confidence interval. ![]() is the probability that the interval will not contain the true population mean. The confidence level is defined as

is the probability that the interval will not contain the true population mean. The confidence level is defined as ![]() is the number of standard deviations

is the number of standard deviations ![]() lies from the mean with a certain probability. If we chose,

lies from the mean with a certain probability. If we chose, ![]() we are asking for the 95% confidence interval because we are setting the probability that the true mean lies within the range at 0.95. If we set

we are asking for the 95% confidence interval because we are setting the probability that the true mean lies within the range at 0.95. If we set ![]() at 1.64 we are asking for the 90% confidence interval because we have set the probability at 0.90. These numbers can be verified by consulting the Standard Normal table. Divide either 0.95 or 0.90 in half and find that probability inside the body of the table. Then read on the top and left margins the number of standard deviations it takes to get this level of probability.

at 1.64 we are asking for the 90% confidence interval because we have set the probability at 0.90. These numbers can be verified by consulting the Standard Normal table. Divide either 0.95 or 0.90 in half and find that probability inside the body of the table. Then read on the top and left margins the number of standard deviations it takes to get this level of probability.

| Confidence Level | blank cell |

|---|---|

| 0.80 | 1.28 |

| 0.90 | 1.645 |

| 0.95 | 1.96 |

| 0.99 | 2.58 |

In reality, we can set whatever level of confidence we desire simply by changing the ![]() value in the formula. It is the analyst’s choice. Common convention in Economics and most social sciences sets confidence intervals at either 90, 95, or 99 percent levels. Levels less than 90% are considered of little value. The level of confidence of a particular interval estimate is called

value in the formula. It is the analyst’s choice. Common convention in Economics and most social sciences sets confidence intervals at either 90, 95, or 99 percent levels. Levels less than 90% are considered of little value. The level of confidence of a particular interval estimate is called ![]() .

.

Let us say we know that the actual population mean number of iTunes downloads is 2.1. The true population mean falls within the range of the 95% confidence interval. There is absolutely nothing to guarantee that this will happen. Further, if the true mean falls outside of the interval, we will never know it. We must always remember that we will never ever know the true mean. Statistics simply allows us, with a given level of probability (confidence), to say that the true mean is within the range calculated.

Changing the Confidence Level or Sample Size

Here again is the formula for a confidence interval for an unknown population mean assuming we know the population standard deviation:

![]()

It is clear that the confidence interval is driven by two things, the chosen level of confidence, ![]() , and the standard deviation of the sampling distribution. The standard deviation of the sampling distribution is further affected by two things, the standard deviation of the population and sample size we chose for our data. Here we wish to examine the effects of each of the choices we have made on the calculated confidence interval, the confidence level, and the sample size.

, and the standard deviation of the sampling distribution. The standard deviation of the sampling distribution is further affected by two things, the standard deviation of the population and sample size we chose for our data. Here we wish to examine the effects of each of the choices we have made on the calculated confidence interval, the confidence level, and the sample size.

For a moment we should ask just what we desire in a confidence interval. Our goal was to estimate the population mean from a sample. We have forsaken the hope that we will ever find the true population mean, and population standard deviation for that matter, for any case except where we have an extremely small population and the cost of gathering the data of interest is very small. In all other cases we must rely on samples. With the Central Limit Theorem, we have the tools to provide a meaningful confidence interval with a given level of confidence, meaning a known probability of being wrong. By meaningful confidence interval we mean one that is useful. Imagine that you are asked for a confidence interval for the ages of your classmates. You have taken a sample and find a mean of 19.8 years. You wish to be very confident, so you report an interval between 9.8 years and 29.8 years. This interval would certainly contain the true population mean and have a very high confidence level. However, it hardly qualifies as meaningful. The very best confidence interval is narrow while having high confidence. There is a natural tension between these two goals. The higher the level of confidence the wider the confidence interval as the case of the students’ ages above. We can see this tension in the equation for the confidence interval.

![]()

The confidence interval will increase in width as ![]() increases,

increases, ![]() increases as the level of confidence increases. There is a tradeoff between the level of confidence and the width of the interval. Now let us look at the formula again and we see that the sample size also plays an important role in the width of the confidence interval. The sample size, n, shows up in the denominator of the standard deviation of the sampling distribution. As the sample size increases, the standard deviation of the sampling distribution decreases and thus the width of the confidence interval, while holding constant the level of confidence. Again, we see the importance of having large samples for our analysis although we then face a second constraint, the cost of gathering data.

increases as the level of confidence increases. There is a tradeoff between the level of confidence and the width of the interval. Now let us look at the formula again and we see that the sample size also plays an important role in the width of the confidence interval. The sample size, n, shows up in the denominator of the standard deviation of the sampling distribution. As the sample size increases, the standard deviation of the sampling distribution decreases and thus the width of the confidence interval, while holding constant the level of confidence. Again, we see the importance of having large samples for our analysis although we then face a second constraint, the cost of gathering data.

Calculating the Confidence Interval: An Alternative Approach

Another way to approach confidence intervals is through the use of something called the Error Bound (margin of error). The Error Bound gets its name from the recognition that it provides the boundary of the interval derived from the standard error of the sampling distribution. In the equations above it is seen that the interval is simply the estimated mean, sample mean, plus or minus something. That something is the Error Bound and is driven by the probability we desire to maintain in our estimate, ![]() , times the standard deviation of the sampling distribution. The Error Bound for a mean is given the name, Error Bound Mean, or EBM (or margin of error, M.O.E.).

, times the standard deviation of the sampling distribution. The Error Bound for a mean is given the name, Error Bound Mean, or EBM (or margin of error, M.O.E.).

To construct a confidence interval for a single unknown population, mean 𝜇, where the population standard deviation is known, we need ![]() as an estimate for 𝜇 and we need the margin of error. Here, the margin of error (EBM) is called the error bound for a population mean. The sample mean

as an estimate for 𝜇 and we need the margin of error. Here, the margin of error (EBM) is called the error bound for a population mean. The sample mean ![]() is the point estimate of the unknown population mean 𝜇.

is the point estimate of the unknown population mean 𝜇.

The confidence interval estimate will have the form:

(Point estimate – error bound, point estimate + error bound) or, in symbols,

![]()

The mathematical formula for this confidence interval is:

![]()

The margin of error (EBM) depends on the confidence level (abbreviated CL). The confidence level is often considered the probability that the calculated confidence interval estimate will contain the true population parameter. However, it is more accurate to state that the confidence level is the percent of confidence intervals that contain the true population parameter when repeated samples are taken. Most often, it is the choice of the person constructing the confidence interval to choose a confidence level of 90% or higher because that person wants to be reasonably certain of his or her conclusions.

There is another probability called alpha ![]() is related to the confidence level, CL.

is related to the confidence level, CL. ![]() is the probability that the interval does not contain the unknown population parameter. Mathematically,

is the probability that the interval does not contain the unknown population parameter. Mathematically, ![]() .

.

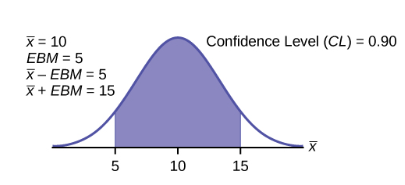

A confidence interval for a population mean with a known standard deviation is based on the fact that the sampling distribution of the sample means follow an approximately normal distribution. Suppose that our sample has a mean of ![]() and we have constructed the 90% confidence interval (5, 15) where EMB = 5.

and we have constructed the 90% confidence interval (5, 15) where EMB = 5.

To get a 90% confidence interval, we must include the central 90% of the probability of the normal distribution. If we include the central 90%, we leave out a total of ![]() in both tails, or 5% in each tail, of the normal distribution.

in both tails, or 5% in each tail, of the normal distribution.

To capture the central 90%, we must go out 1.645 standard deviations on either side of the calculated sample mean. The value 1.645 is the z-score from a standard normal probability distribution that puts an area of 0.90 in the center, an area of 0.05 in the far-left tail, and an area of 0.05 in the far-right tail.

It is important that the standard deviation used must be appropriate for the parameter we are estimating, so in this section we need to use the standard deviation that applies to the sampling distribution for mean which we studied with the Central Limit Theorem and is, ![]() .

.

Calculating the Confidence Interval Using EBM

To construct a confidence interval, estimate for an unknown population mean, we need data from a random sample. The steps to construct and interpret the confidence interval are listed below.

- Calculate the sample mean

from the sample data. Remember, in this section we know the population standard deviation

from the sample data. Remember, in this section we know the population standard deviation  .

. - Find the z-score from the standard normal table that corresponds to the confidence level desired.

- Calculate the error bound EBM.

- Construct the confidence interval.

- Write a sentence that interprets the estimate in the context of the situation in the problem.

Finding the z-score for the Stated Confidence Level

When we know the population standard deviation ![]() , we use a standard normal distribution to calculate the error bound EBM and construct the confidence interval. We need to find the value of z that puts an area equal to the confidence level (in decimal form) in the middle of the standard normal distribution

, we use a standard normal distribution to calculate the error bound EBM and construct the confidence interval. We need to find the value of z that puts an area equal to the confidence level (in decimal form) in the middle of the standard normal distribution ![]() .

.

The confidence level, CL, is the area in the middle of the standard normal distribution. ![]() is the area that is split equally between the two tails. Each of the tails contains an area equal to

is the area that is split equally between the two tails. Each of the tails contains an area equal to ![]() .

.

The z-score that has an area to the right of ![]() is denoted by

is denoted by ![]()

For example, when ![]()

The area to the right of ![]() is

is ![]() .

.

![]() , using a standard normal probability table. We will see later that we can use a different probability table, the Student's t-distribution, for finding the number of standard deviations of commonly used levels of confidence.

, using a standard normal probability table. We will see later that we can use a different probability table, the Student's t-distribution, for finding the number of standard deviations of commonly used levels of confidence.

Calculating the Error Bound (EBM)

The error bound formula for an unknown population mean ![]() when the population standard deviation

when the population standard deviation ![]() is known as

is known as

![]()

Constructing the Confidence Interval

The confidence interval estimate has the format ![]() or the formula:

or the formula:

![]()

The graph gives a picture of the entire situation.

![]()

Confidence Interval for Mean: 1 Sample Z Test (Using Formula)

Check Your Understanding: Confidence Interval for Mean

A Confidence Interval for a Population Standard Deviation Unknown, Small Sample Case

In practice, we rarely known the population standard deviation. In the past, when the sample size was large, this did not present a problem to statisticians. They used the sample standard deviation s as an estimate for ![]() and proceeded as before to calculate a confidence interval with close enough results. Statisticians ran into problems when the sample size was small. A small sample size caused inaccuracies in the confidence interval.

and proceeded as before to calculate a confidence interval with close enough results. Statisticians ran into problems when the sample size was small. A small sample size caused inaccuracies in the confidence interval.

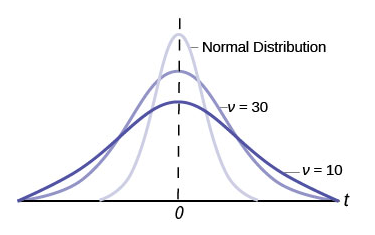

William S. Goset (1876-1937) of the Guinness brewery in Dublin, Ireland ran into this problem. His experiments with hops and barely produced very few samples. Just replacing ![]() with s did not produce accurate results when he tried to calculate a confidence interval. He realized that he could not use a normal distribution for the calculation; he found that the actual distribution depends on the sample size. This problem led him to “discover” what is called the Student’s t-distribution. The name comes from the fact that Gosset wrote under the pen name “A Student.”

with s did not produce accurate results when he tried to calculate a confidence interval. He realized that he could not use a normal distribution for the calculation; he found that the actual distribution depends on the sample size. This problem led him to “discover” what is called the Student’s t-distribution. The name comes from the fact that Gosset wrote under the pen name “A Student.”

Up until the mid-1970s, some statisticians used the normal distribution approximation for large sample sizes and used the Student’s t-distribution only for sample sizes of at most 30 observations.

If you draw a simple random sample of size n from a population with mean 𝜇 and unknown population standard deviation ![]() and calculate the t-score

and calculate the t-score ![]() , then the t-scores follow a Student’s t-distribution with n – 1 degrees of freedom. The t-score has the same interpretation as the z-score. It measures how far in standard deviation units

, then the t-scores follow a Student’s t-distribution with n – 1 degrees of freedom. The t-score has the same interpretation as the z-score. It measures how far in standard deviation units ![]() is from its mean

is from its mean ![]() . For each sample size n, there is a different Student’s t-distribution.

. For each sample size n, there is a different Student’s t-distribution.

The degrees of freedom, n – 1, come from the calculation of the sample standard deviation s. Remember when we first calculated a sample standard deviation, we divided the sum of the squared deviations by ![]() , but we used n deviations

, but we used n deviations ![]() to calculate s. Because the sum of the deviations is zero, we can find the last deviation once we know the other

to calculate s. Because the sum of the deviations is zero, we can find the last deviation once we know the other ![]() deviations. The other

deviations. The other ![]() deviations can change or vary freely. We call the number

deviations can change or vary freely. We call the number ![]() the degrees of freedom (df) in recognition that one is lost in the calculations. The effect of losing a degree of freedom is that the t-value increases, and the confidence interval increases in width.

the degrees of freedom (df) in recognition that one is lost in the calculations. The effect of losing a degree of freedom is that the t-value increases, and the confidence interval increases in width.

Properties of the Student’s t-distribution

- The graph for the Student’s t-distribution is similar to the standard normal curve and at infinite degrees of freedom it is the normal distribution. You can confirm this by reading the bottom line at infinite degrees of freedom for a familiar level of confidence, e.g., at column 0.05, 95% level of confidence, we find the t-value of 1.96 at infinite degrees of freedom.

- The mean for the Student’s t-distribution is zero and the distribution is symmetric about zero, again like the standard normal distribution.

- The Student’s t-distribution has more probability in its tails than the standard normal distribution because the spread of the t-distribution is greater than the spread of the standard normal. So, the graph of the Student’s t-distribution will be thicker in the tails and shorter in the center than the graph of the standard normal distribution.

- The exact shape of the Student’s t-distribution depends on the degrees of freedom. As the degrees of freedom increases, the graph of Student’s t-distribution becomes more like the graph of the standard normal distribution.

- The underlying population of individual observations is assumed to be normally distributed with unknown population mean

and unknown population standard deviation 𝜎. This assumption comes from the Central Limit Theorem because the individual observations in this case are the

and unknown population standard deviation 𝜎. This assumption comes from the Central Limit Theorem because the individual observations in this case are the  of the sample distribution. The size of the underlying population is generally not relevant unless it is very small. If it is normal, then the assumption is met and does not need discussion.

of the sample distribution. The size of the underlying population is generally not relevant unless it is very small. If it is normal, then the assumption is met and does not need discussion.

A probability table for the Student’s t-distribution is used to calculate t-values at various commonly used levels of confidence. The table gives t-scores that correspond to the confidence level (column) and degrees of freedom (row). When using a t-table, note that some tables are formatted to show the confidence level in the column headings, while the column headings in some tables may show only corresponding area in one or both tails. Notice that at the bottom the table will show the t-value for infinite degrees of freedom. Mathematically, as the degrees of freedom increase, the t-distribution approaches the standard normal distribution. You can find familiar Z-values by looking in the relevant alpha column and reading value in the last row.

A Student’s t-table gives t-scores given the degrees of freedom and the right-tailed probability.

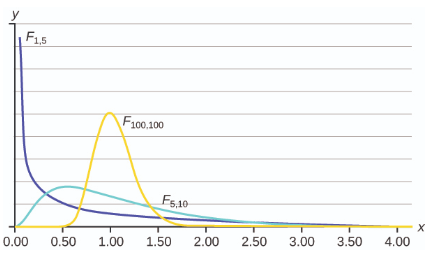

The Student’s t-distribution has one of the most desirable properties of the normal: it is symmetrical. What the Student’s t-distribution does is spread out the horizontal axis, so it takes a larger number of standard deviations to capture the same amount of probability. In reality there are an infinite number of Student’s t-distributions, one for each adjustment to the sample size. As the sample size increases, the Student’s t-distribution become more and more like the normal distribution. When the sample size reaches 30 the normal distribution is usually substituted for the Student’s t because they are so much alike. This relationship between the Student’s t-distribution and the normal distribution is shown in Figure 8.

Here is the confidence interval for the mean for cases when the sample size is smaller than 30 and we do not know the population standard deviation, ![]() :

:

![]()

Here the point estimate of the population standard deviation, s has been substituted for the population standard deviation, ![]() , and

, and ![]() has been substituted for

has been substituted for ![]() . The Greek letter

. The Greek letter ![]() (pronounced nu) is places in the general formula in recognition that there are many Student

(pronounced nu) is places in the general formula in recognition that there are many Student ![]() distributions, one for each sample size.

distributions, one for each sample size. ![]() is the symbol for the degrees of freedom of the distribution and depends on the size of the sample. Often df is used to abbreviate degrees of freedom. For this type of problem, the degrees of freedom is

is the symbol for the degrees of freedom of the distribution and depends on the size of the sample. Often df is used to abbreviate degrees of freedom. For this type of problem, the degrees of freedom is ![]() , where n is the sample size. To look up a probability in the Student’s t table we have to know the degrees of freedom in the problem.

, where n is the sample size. To look up a probability in the Student’s t table we have to know the degrees of freedom in the problem.

Confidence Intervals: Using the t Distribution

Check Your Understanding: Confidence Intervals

A Confidence Interval for a Population Proportion

During an election year, we see articles in the newspaper that state confidence intervals in terms of proportions or percentages. For example, a poll for a particular candidate running for president might show that the candidate has 40% of the vote within three percentage points (if the sample is large enough). Often, election polls are calculated with 95% confidence, so, the pollsters would be 95% confident that the true proportion of voters who favored the candidate would be between 0.37 and 0.43.

The procedure to find the confidence interval for a population proportion is similar to that for the population mean, but the formulas are a bit different although conceptually identical. While the formulas are different, they are based upon the same mathematical foundation given to us by the Central Limit Theorem. Because of this we will see the same basic format using the same three pieces of information: the sample value of the parameter in question, the standard deviation of the relevant sampling distribution, and the number of standard deviations we need to have the confidence in our estimate that we desire.

How do you know you are dealing with a proportion problem? First, the underlying distribution has a binary random variable and therefore is a binomial distribution. (There is no mention of a mean or average). If X is a binomial random variable, then ![]() where n is the number of trials and p is the probability of a success. To form a sample proportion, take X, the random variable for the number of successes and divide it by n, the number of trials (or the sample size). The random variable

where n is the number of trials and p is the probability of a success. To form a sample proportion, take X, the random variable for the number of successes and divide it by n, the number of trials (or the sample size). The random variable ![]() (read “P Prime”) is the sample proportion,

(read “P Prime”) is the sample proportion,

![]()

(Sometimes the random variable is denoted as ![]() , read “P hat.”)

, read “P hat.”)

![]() = the estimated proportion of successes or sample proportion of successes (p’ is a point estimate for p, the true population proportion, and thus q is the probability of a failure in any one trial.)

= the estimated proportion of successes or sample proportion of successes (p’ is a point estimate for p, the true population proportion, and thus q is the probability of a failure in any one trial.)

x = the number of successes in the sample

n = the size of the sample

The formula for the confidence interval for a population proportion follows the same format as that for an estimate of a population mean. Remembering the sampling distribution for the proportion, the standard deviation was found to be:

![]()

The confidence interval for a population proportion, therefore, becomes:

![]()

![]() is set according to our desired degree of confidence and

is set according to our desired degree of confidence and ![]() is the standard deviation of the sampling distribution.

is the standard deviation of the sampling distribution.

The sample proportions p’ and q’ are estimates of the unknown population proportions p and q. The estimated proportions p’ and q’ are used because p and q are not known.

Remember that as p moves further from 0.5 the binomial distribution becomes less symmetrical. Because we are estimating the binomial with the symmetrical normal distribution the further away from symmetrical the binomial becomes the less confidence we have in the estimate.

This conclusion can be demonstrated through the following analysis. Proportions are based upon the binomial probability distribution. The possible outcomes are binary, either “success” or “failure.” This gives rise to a proportion, meaning the percentage of the outcomes that are “successes.” It was shown that the binomial distribution could be fully understood if we knew only the probability of a success in any one trial, called p. The mean and the standard deviation of the binomial were found to be:

![]()

![]()

It was also shown that the binomial could be estimated by the normal distribution if BOTH np AND nq were greater than 5. From the discussion above, it was found that the standardizing formula for the binomial distribution is:

![]()

Which is nothing more than a restatement of the general standardizing formula with appropriate substitutions for ![]() and

and ![]() from the binomial. We can use the standard normal distribution, the reason Z is in the equation, because the normal distribution is the limiting distribution of the binomial. This is another example of the Central Limit Theorem.

from the binomial. We can use the standard normal distribution, the reason Z is in the equation, because the normal distribution is the limiting distribution of the binomial. This is another example of the Central Limit Theorem.

We can now manipulate this formula in just the same way we did for finding the confidence intervals for a mean, but to find the confidence interval for the binomial population parameter, p.

![]()

Where ![]() , the point estimate of p taken from the sample. Notice that p’ has replaced p in the formula. This is because we do not know p, indeed, this is just what we are trying to estimate.

, the point estimate of p taken from the sample. Notice that p’ has replaced p in the formula. This is because we do not know p, indeed, this is just what we are trying to estimate.

x = number of successes.

n = the number in the sample.

![]() , the failure in any trial

, the failure in any trial

Unfortunately, there is no correction factor for cases where the sample size is small so np’ and nq’ must always be greater than 5 to develop an interval estimate for p.

Also written as:

![]()

How to Construct a Confidence Interval for Population Proportion

Check Your Understanding: How to Construct a Confidence Interval for Population Proportion

Estimate the Required Sample Size for Testing

In this section, you will learn how to calculate sample size with continuous and binary random samples. by reading each description along with watching the videos included. Also, short problems to check your understanding are included.

Calculating the Sample Size n: Continuous and Binary Random Variables

Continuous Random Variables

Usually, we have no control over the sample size of a data set. However, if we are able to set the sample size, as in cases where we are taking a survey, it is very helpful to know just how large it should be to provide the most information. Sampling can be very costly in both time and product. Simple telephone surveys will cost approximately $30.00 each, for example, and some sampling requires the destruction of the product.

If we go back to our standardizing formula for the sampling distribution for means, we can see that it is possible to solve it for n. If we do this, we have ![]() in the denominator.

in the denominator.

![]()

Because we have not taken a sample, yet we do not know any of the variables in the formula except that we can set ![]() to the level of confidence we desire just as we did when determining confidence intervals. If we set a predetermined acceptable error, or tolerance, for the difference between

to the level of confidence we desire just as we did when determining confidence intervals. If we set a predetermined acceptable error, or tolerance, for the difference between ![]() in the formula, we are much further in solving for the sample size n. We still do not know the population standard deviation,

in the formula, we are much further in solving for the sample size n. We still do not know the population standard deviation, ![]() . In practice, a pre-survey is usually done which allows for fine tuning the questionnaire and will give a sample standard deviation that can be used. In other cases, previous information from other surveys may be used for

. In practice, a pre-survey is usually done which allows for fine tuning the questionnaire and will give a sample standard deviation that can be used. In other cases, previous information from other surveys may be used for ![]() in the formula. While crude, this method of determining the sample size may help in reducing cost significantly. If will be the actual data gathered that determines the inferences about the population, so caution in the sample size is appropriate calling for high levels of confidence and small sampling errors.

in the formula. While crude, this method of determining the sample size may help in reducing cost significantly. If will be the actual data gathered that determines the inferences about the population, so caution in the sample size is appropriate calling for high levels of confidence and small sampling errors.

Binary Random Variables

What was done in cases when looking for the mean of a distribution can also be done when sampling to determine the population parameter p for proportions. Manipulation of the standardizing formula for proportions gives:

![]()

Where ![]() , and is the acceptable sampling error, or tolerance, for this application. This will be measured in percentage points.

, and is the acceptable sampling error, or tolerance, for this application. This will be measured in percentage points.

In this case the very object of our search is in the formula, p, and of course q because ![]() . This result occurs because the binomial distribution is a one parameter distribution. If we know p then we know the mean and the standard deviation. Therefore, p shows up in the standard deviation of the sampling distribution which is where we got this formula. If, in an abundance of caution, we substitute 0.5 for p we will draw the largest required sample size that will provided the level of confidence specified by

. This result occurs because the binomial distribution is a one parameter distribution. If we know p then we know the mean and the standard deviation. Therefore, p shows up in the standard deviation of the sampling distribution which is where we got this formula. If, in an abundance of caution, we substitute 0.5 for p we will draw the largest required sample size that will provided the level of confidence specified by ![]() and the tolerance we have selected. This is true because of all combinations of two fractions that add to one, the largest multiple is when each is 0.5. Without any other information concerning the population parameter p, this is the common practice. This may result in oversampling, but certainly not under sampling, thus, this is a cautious approach.

and the tolerance we have selected. This is true because of all combinations of two fractions that add to one, the largest multiple is when each is 0.5. Without any other information concerning the population parameter p, this is the common practice. This may result in oversampling, but certainly not under sampling, thus, this is a cautious approach.

There is an interesting trade-off between the level of confidence and the sample size that shows up here when considering the cost of sampling. Table 4 shows the appropriate sample size at different levels of confidence and different level of the acceptable error, or tolerance.

| Required sample size (90%) | Required sample size (95%) | Tolerance level |

|---|---|---|

| 1691 | 2401 | 2% |

| 752 | 1067 | 3% |

| 271 | 384 | 5% |

| 68 | 96 | 10% |

Table 4 is designed to show the maximum sample size required at different levels of confidence given an assumed ![]() as discussed above.

as discussed above.

The acceptable error, called tolerance in the table, is measured in plus or minus values from the actual proportion. For example, an acceptable error of 5% means that if the sample proportion was found to be 26 percent, the conclusion would be that the actual population proportion is between 21 and 31 percent with a 90 percent level of confidence if a sample of 271 had been taken. Likewise, if the acceptable error was set at 2%, then the population proportion would be between 24 and 28 percent with a 90 percent level of confidence but would require that the sample size be increased from 271 to 1,691. If we wished a higher level of confidence, we would require a larger sample size. Moving from a 90 percent level of confidence to a 95 percent level at a plus or minus 5% tolerance requires changing the sample size from 271 to 384. A very common sample size often seen reported in political surveys is 384. With the survey results it is frequently stated that the results are good to a plus or minus 5% level of “accuracy”.

Example: Suppose a mobile phone company wants to determine the current percentage of customers aged 50+ who use text messaging on their cell phones. How many customers aged 50+ should the company survey in order to be 90% confident that the estimated (sample) proportion is within three percentage points of the true population proportion of customers aged 50+ who use text messaging on their cell phones.

Solution: From the problem, we know that the acceptable error, e, is 0.03 (3% = 0.03) and ![]() , because the confidence level is 90%. The acceptable error, e, is the difference between the actual population proportion p, and the sample proportion we expect to get from the sample.

, because the confidence level is 90%. The acceptable error, e, is the difference between the actual population proportion p, and the sample proportion we expect to get from the sample.

However, in order to find n, we need to know the estimated (sample) proportion p’. Remember that ![]() . But we do not know p’ yet. Since we multiply p’ and q’ together, we make them both equal to 0.5 because

. But we do not know p’ yet. Since we multiply p’ and q’ together, we make them both equal to 0.5 because ![]() results in the largest possible product. (Try other products:

results in the largest possible product. (Try other products: ![]() and so on). The largest possible product gives us the largest n. This gives us a large enough sample so that we can be 90% confident that we are within three percentage points of the true population proportion. To calculate the sample size n, use the formula and make the substitutions.

and so on). The largest possible product gives us the largest n. This gives us a large enough sample so that we can be 90% confident that we are within three percentage points of the true population proportion. To calculate the sample size n, use the formula and make the substitutions.

![]()

Round the answer to the next higher value. The sample size should be 752 cell phone customers aged 50+ in order to be 90% confident that the estimated (sample) proportion is within three percentage points of the true population proportion of all customers aged 50+ who use text messaging on their cell phones.

Estimation and Confidence Intervals: Calculate Sample Size

Calculating Sample size to Predict a Population Proportion

Use Specific Significance Tests Including, Z-Test, T-Test (one and two samples), Chi-Squared Test

In this section, you will learn the fundamentals of hypothesis testing along with hypothesis testing with errors by reading each description along with watching the videos. Also, short problems to check your understanding are included.

Hypothesis Testing with One Sample

Statistical testing is part of a much larger process known as the scientific method. The scientific method, briefly, states that only by following a careful and specific process can some assertion be included in the accepted body of knowledge. This process begins with a set of assumptions upon which a theory, sometimes called a model, is built. This theory, if it has any validity, will lead to predictions; what we call hypotheses.

Statistics and statisticians are not necessarily in the business of developing theories, but in the business of testing others’ theories. Hypotheses come from these theories based upon an explicit set of assumptions and sound logic. The hypothesis comes first, before any data are gathered. Data do not create hypotheses; they are used to test them. If we bear this in mind as we study this section, the process of forming and testing hypotheses will make more sense.

One job of a statistician is to make statistical inferences about populations based on samples taken from the population. Confidence intervals are one way to estimate a population parameter. Another way to make a statistical inference is to make a decision about the value of a specific parameter. For instance, a car dealer advertises that its new small truck gets 35 miles per gallon, on average. A tutoring service claims that its method of tutoring helps 90% of its students get an A or a B. A company says that women managers in their company earn an average of $60,000 per year.

A statistician will make a decision about these claims. This process is called "hypothesis testing." A hypothesis test involves collecting data from a sample and evaluating the data. Then, the statistician makes a decision as to whether or not there is sufficient evidence, based upon analyses of the data, to reject the null hypothesis.

Hypothesis Testing: The Fundamentals

Null and Alternative Hypotheses

The actual test begins by considering two hypotheses. They are called the null hypothesis and the alternative hypothesis. These hypotheses contain opposing viewpoints.

![]() The null hypothesis: It is a statement of no difference between the variables–they are not related. This can often be considered the status quo and as a result if you cannot accept the null, it requires some action.

The null hypothesis: It is a statement of no difference between the variables–they are not related. This can often be considered the status quo and as a result if you cannot accept the null, it requires some action.

![]() The alternative hypothesis: It is a claim about the population that is contradictory to

The alternative hypothesis: It is a claim about the population that is contradictory to ![]() and what we conclude when we cannot accept

and what we conclude when we cannot accept ![]() . This is usually what the researcher is trying to prove. The alternative hypothesis is the contender and must win with significant evidence to overthrow the status quo. This concept is sometimes referred to the tyranny of the status quo because as we will see later, to overthrow the null hypothesis takes usually 90 or greater confidence that this is the proper decision.

. This is usually what the researcher is trying to prove. The alternative hypothesis is the contender and must win with significant evidence to overthrow the status quo. This concept is sometimes referred to the tyranny of the status quo because as we will see later, to overthrow the null hypothesis takes usually 90 or greater confidence that this is the proper decision.

Since the null and alternative hypotheses are contradictory, you must examine evidence to decide if you have enough evidence to reject the null hypothesis or not. The evidence is in the form of sample data.

After you have determined which hypothesis the sample supports, you make a decision. There are two options for a decision. They are “cannot accept ![]() ” if the sample information favors the alternative hypothesis or “do not reject

” if the sample information favors the alternative hypothesis or “do not reject ![]() ” or “decline to reject

” or “decline to reject ![]() ” if the sample information is insufficient to reject the null hypothesis. These conclusions are all based upon a level of probability, a significance level, that is set by the analyst.

” if the sample information is insufficient to reject the null hypothesis. These conclusions are all based upon a level of probability, a significance level, that is set by the analyst.

Table 5 presents the various hypotheses in the relevant pairs. For example, if the null hypothesis is equal to some value, the alternative has to be not equal to that value.

| Equal (=) | Not equal |

| Greater than or equal to |

Less than (<) |

| Less than or equal to |

More than (>) |

Note: As a mathematical convention ![]() always has a symbol with an equal in it.

always has a symbol with an equal in it. ![]() never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test.

never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test.

Example 1:

![]() : No more than 30% of the registered voters in Santa Clara County voted in the primary election.

: No more than 30% of the registered voters in Santa Clara County voted in the primary election. ![]()

![]() : More than 30% of the registered voters in Santa Clara County voted in the primary election.

: More than 30% of the registered voters in Santa Clara County voted in the primary election. ![]()

Example 2: We wants to test whether the mean GPA of students in American colleges is different from 2.0 (out of 4.0). The null and alternative hypotheses are:

![]()

![]()

Example 3: We want to test if college students take less than five years to graduate from college, on the average. The null and alternative hypotheses are:

![]()

![]()

Hypothesis Testing: Setting up the Null and Alternative Hypothesis Statements

Outcomes and the Type I and Type II Errors

When you perform a hypothesis test, there are four possible outcomes depending on the actual truth (or falseness) of the null hypothesis ![]() and the decision to reject or not. The outcomes are summarized in Table 6:

and the decision to reject or not. The outcomes are summarized in Table 6:

| Statistical Decision | |

blank cell |

|---|---|---|

| True | False | |

| Cannot reject |

Correct outcome | Type II error |

| Cannot accept |

Type I error | Correct outcome |

The four possible outcomes in the table are:

-

-

-

- The decision is cannot reject

when

when  is true (correct decision).

is true (correct decision). - The decision is cannot accept

when

when  is true (incorrect decision known as a Type I error). This case is described as “rejecting a good null”. As we will see later, it is this type of error that we will guard against by setting the probability of making such an error. The goal is to NOT take an action that is an error.

is true (incorrect decision known as a Type I error). This case is described as “rejecting a good null”. As we will see later, it is this type of error that we will guard against by setting the probability of making such an error. The goal is to NOT take an action that is an error. - The decision is cannot reject

when, in fact,

when, in fact,  is false (incorrect decision known as a Type II error). This is called “accepting a false null”. In this situation you have allowed the status quo to remain in force when it should be overturned. As we will see, the null hypothesis has the advantage in competition with the alternative.

is false (incorrect decision known as a Type II error). This is called “accepting a false null”. In this situation you have allowed the status quo to remain in force when it should be overturned. As we will see, the null hypothesis has the advantage in competition with the alternative. - The decision is cannot accept

when

when  is false (correct decision).

is false (correct decision).

- The decision is cannot reject

-

-

Each of the errors occurs with a particular probability. The Greek letters ![]() and

and ![]() represent the probabilities.

represent the probabilities.

![]() probability of a Type I error = P(Type I error) = probability of rejecting the null hypothesis when the null hypothesis is true: rejecting a good null.

probability of a Type I error = P(Type I error) = probability of rejecting the null hypothesis when the null hypothesis is true: rejecting a good null.

![]() probability of a Type II error = P(Type II error) = probability of not rejecting the null hypothesis when the null hypothesis is false.

probability of a Type II error = P(Type II error) = probability of not rejecting the null hypothesis when the null hypothesis is false. ![]() is called the Power of the Test.

is called the Power of the Test.

![]() should be as small as possible because they are probabilities of errors.

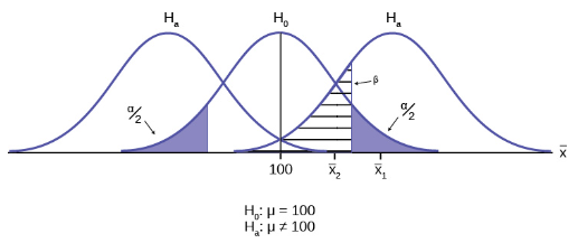

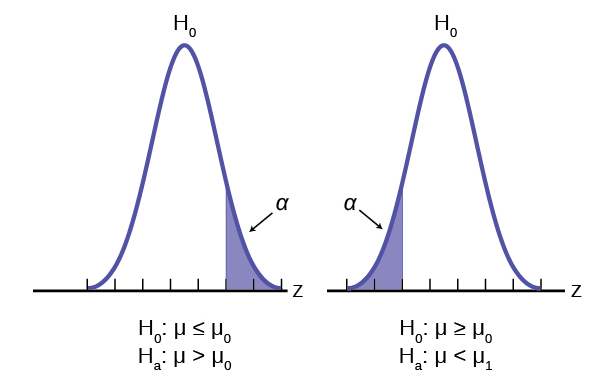

should be as small as possible because they are probabilities of errors.