14.3 Quasi-experimental designs

Learning Objectives

Learners will be able to…

- Describe a quasi-experimental design in social work research

- Understand the different types of quasi-experimental designs

- Determine what kinds of research questions quasi-experimental designs are suited for

- Discuss advantages and disadvantages of quasi-experimental designs

Quasi-experimental designs are a lot more common in social work research than true experimental designs. Although quasi-experiments don’t do as good a job of mitigating threats to internal validity, they still allow us to establish temporality, which is a criterion for establishing nomothetic causality. The prefix quasi means “resembling,” so quasi-experimental research is research that resembles experimental research, but is not true experimental research. Nonetheless, given proper attention, quasi-experiments can still provide rigorous and useful results.

The primary difference between quasi-experimental research and true experimental research is that quasi-experimental research does not involve random assignment to control and experimental groups. Instead, we talk about comparison groups in quasi-experimental research. Consequently, these designs don’t control for extraneous variables as well as true experiments do, which means there are larger threats to internal validity in quasi-experiments.

Quasi-experiments are most likely to be conducted in field settings in which random assignment is difficult or impossible. Realistically, our example of the CBT-social anxiety project is likely to be a quasi-experiment, based on the resources and participant pool we’re likely to have available. There are different kinds of quasi-experiments, and we will discuss the main types below: nonequivalent comparison group designs, static-group designs, ex post facto comparison group designs, and time series designs.

Nonequivalent comparison group design

This type of design looks very similar to the classical experimental design that we discussed in section 14.2. But instead of random assignment to control and experimental groups, researchers use other methods to construct their comparison and experimental groups. Researchers using this design will try to select a comparison group that is as similar to the experimental group as possible on relevant factors.

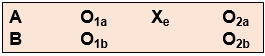

A diagram of this design will also look very similar to the pretest/post-test design, but you’ll notice we’ve removed the “R” from our groups, since they are not randomly assigned (Figure 14.6).

This kind of design provides weaker evidence that the intervention itself leads to a change in outcome. Nonetheless, we are still able to establish time order using this method, and can thereby show an association between the intervention and the outcome. Like true experimental designs, this type of quasi-experimental design is useful for explanatory research questions.

What might this look like in a practice setting? Let’s say you’re working at an agency that provides CBT and other types of interventions, and you have identified a group of clients who are seeking help for social anxiety, as in our earlier example. Once you’ve obtained consent from your clients, you can create a comparison group using a matching method. If the group is small, you might use individual matching, pairing each client in the experimental group with a comparison client who has similar attributes. If it’s larger, you’ll probably use aggregate matching, sorting people by demographics so that the two groups have similar population profiles. (Aggregate matching is easier when your agency has some kind of electronic records or database, but it’s still possible to do manually.)
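Individual matching can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Client` record, the `individual_match` function, the `max_age_gap` parameter, and the matching rule (same gender, age within five years) are all hypothetical choices, and the client data are made up. A real project would match on whichever factors are relevant to its outcome.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Client:
    # Hypothetical record; real agency data would carry more attributes.
    client_id: str
    age: int
    gender: str


def individual_match(experimental, candidates, max_age_gap=5):
    """Pair each experimental-group client with one unused candidate of the
    same gender whose age is closest, within max_age_gap years."""
    available = list(candidates)
    pairs = []
    for person in experimental:
        eligible = [
            c for c in available
            if c.gender == person.gender and abs(c.age - person.age) <= max_age_gap
        ]
        if not eligible:
            continue  # no suitable match, so this client stays unmatched
        best = min(eligible, key=lambda c: abs(c.age - person.age))
        available.remove(best)  # each comparison client is used only once
        pairs.append((person, best))
    return pairs


# Made-up clients, for illustration only.
experimental = [Client("E1", 24, "F"), Client("E2", 41, "M"), Client("E3", 67, "F")]
candidates = [Client("C1", 39, "M"), Client("C2", 26, "F"),
              Client("C3", 30, "F"), Client("C4", 52, "M")]

pairs = individual_match(experimental, candidates)
for treated, comparison in pairs:
    print(treated.client_id, "->", comparison.client_id)
```

In this toy run, E3 has no candidate within five years of age and is left unmatched, which shows why individual matching can shrink an already small group.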

Static-group design

Another type of quasi-experimental research is the static-group design. In this type of research, there are both comparison and experimental groups, which are not randomly assigned. There is no pretest, only a post-test, and the comparison group has to be constructed by the researcher. Sometimes, researchers will use matching techniques to construct the groups, but often, the groups are constructed out of convenience, based on who is being served at an agency.

Ex post facto comparison group design

Ex post facto (Latin for “after the fact”) designs are extremely similar to nonequivalent comparison group designs. There are still comparison and experimental groups, pretest and post-test measurements, and an intervention. But in ex post facto designs, participants are assigned to the comparison and experimental groups once the intervention has already happened. This type of design often occurs when interventions are already up and running at an agency and the agency wants to assess effectiveness based on people who have already completed treatment.

In most clinical agency environments, social workers conduct both initial and exit assessments, so there is usually some kind of pretest and post-test measure available. We also typically collect demographic information about our clients, which could allow us to use some kind of matching to construct comparison and experimental groups.

In terms of internal validity and establishing causality, ex post facto designs are a bit of a mixed bag. The ability to establish causality depends partially on the ability to construct comparison and experimental groups that are demographically similar so we can control for these extraneous variables.

Propensity Score Matching

There are more advanced ways to match participants in the experimental and comparison groups based on statistical analyses. Researchers using a quasi-experimental design may consider using a matching algorithm to select people for the experimental and comparison groups based on their similarity on key variables (or “covariates”). This allows the assignment to be considered “as good as random” after conditioning on the covariates.

Propensity Score Matching (PSM, Rosenbaum & Rubin, 1983)[1] is one such algorithm in which the probability of being assigned to the treatment group can be modeled as a function of several covariates using logistic regression. Participants in the two groups who have similar estimated probabilities (propensity scores) are then matched with one another. However, to use Propensity Score Matching, researchers need a relatively large initial sample, because participants without a close match are dropped during the statistical matching process, which reduces the final sample. The need for a large sample means Propensity Score Matching may not be feasible for all projects.
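The two steps of propensity score matching, estimating scores with logistic regression and then matching on them, can be sketched as follows. This is a minimal illustration, not a recommended implementation: the data are synthetic, the two covariates (standardized age and baseline anxiety) are assumptions, the logistic regression is a bare gradient-descent fit written for the example, and the greedy one-to-one matching with a caliper of 0.05 is just one of several possible matching rules. In practice, researchers would normally rely on an established statistical package.

```python
import math
import random


def sigmoid(z):
    # Clip z so math.exp cannot overflow on extreme values.
    z = max(-30.0, min(30.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def propensity(w, x):
    """Estimated probability of being in the treatment group."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


def fit_logistic(X, y, lr=0.5, epochs=500):
    """Fit logistic regression by batch gradient descent.
    Returns the weights, intercept first."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = propensity(w, xi) - yi
            grad[0] += err
            for j, xij in enumerate(xi):
                grad[j + 1] += err * xij
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad)]
    return w


def match_pairs(people, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching on the propensity score.
    Treated people with no comparison person within the caliper are dropped."""
    pool = [p for p in people if p["treated"] == 0]
    pairs = []
    for t in (p for p in people if p["treated"] == 1):
        if not pool:
            break
        best = min(pool, key=lambda c: abs(c["score"] - t["score"]))
        if abs(best["score"] - t["score"]) <= caliper:
            pool.remove(best)  # each comparison person is used only once
            pairs.append((t, best))
    return pairs


# Synthetic, made-up data: two standardized covariates per person.
random.seed(0)
people = []
for i in range(200):
    age, anxiety = random.gauss(0, 1), random.gauss(0, 1)
    p_treat = sigmoid(0.8 * anxiety - 0.3 * age - 0.5)
    people.append({"id": i, "x": [age, anxiety],
                   "treated": int(random.random() < p_treat)})

weights = fit_logistic([p["x"] for p in people], [p["treated"] for p in people])
for p in people:
    p["score"] = propensity(weights, p["x"])

pairs = match_pairs(people)
print(f"Initial sample: {len(people)}, matched sample: {2 * len(pairs)}")
```

The printed counts illustrate the sample-size cost described above: only people who find a close match remain in the analysis.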

Time series design

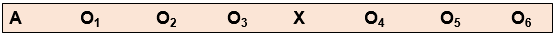

Another type of quasi-experimental design is a time series design. Unlike the other quasi-experimental designs discussed here, time series designs do not have a comparison group. A time series is a set of measurements taken at intervals over a period of time (Figure 14.7). A proper time series design should include at least three measurement points before the intervention and at least three after it. While there are a few types of time series designs, we’re going to focus on the most common: interrupted time series design.

But why use this method? Here’s an example. Let’s think about elementary student behavior throughout the school year. As any parent or teacher knows, kids get very excited and animated around holidays, days off, or even just on a Friday afternoon. This fact might mean that around those times of year, there are more reports of disruptive behavior in classrooms. What if we took our one and only measurement in mid-December? It’s possible we’d see a higher-than-average rate of disruptive behavior reports, which could bias our results if our next measurement falls at a time of year when students are in a different, less excitable frame of mind. When we take multiple measurements throughout the first half of the school year, we can establish a more accurate baseline for the rate of these reports by looking at the trend over time.

We may want to test the effect of extended recess times in elementary school on reports of disruptive behavior in classrooms. When students come back after the winter break, the school extends recess by 10 minutes each day (the intervention), and the researchers start tracking the monthly reports of disruptive behavior again. These reports could be subject to the same fluctuations as the pre-intervention reports, and so we once again take multiple measurements over time to try to control for those fluctuations.

This method improves the extent to which we can establish causality because we are accounting for a major extraneous variable in the equation—the passage of time. On its own, it does not allow us to account for other extraneous variables, but it does establish time order and association between the intervention and the trend in reports of disruptive behavior. Finding a stable condition before the treatment that changes after the treatment is evidence for causality between treatment and outcome.
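To make the logic of the recess example concrete, the sketch below summarizes each phase of an interrupted time series by its average level and its month-to-month trend. The monthly counts are invented purely for illustration, and the helper names (`slope`, `summarize_phase`) are hypothetical. A full interrupted time series analysis would use a proper statistical model, so treat this as a descriptive first look only.

```python
from statistics import mean


def slope(values):
    """Least-squares slope of values against their position (0, 1, 2, ...)."""
    xs = range(len(values))
    x_bar, y_bar = mean(xs), mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def summarize_phase(values, min_points=3):
    """Return the mean level and trend for one phase of the series."""
    if len(values) < min_points:
        raise ValueError(f"need at least {min_points} measurement points")
    return {"mean": mean(values), "slope": slope(values)}


# Made-up monthly counts of disruptive behavior reports, for illustration only.
pre_reports = [22, 25, 21, 24, 28]   # August through December, before extended recess
post_reports = [18, 17, 19, 16, 15]  # January through May, after extended recess

baseline = summarize_phase(pre_reports)
followup = summarize_phase(post_reports)
level_change = followup["mean"] - baseline["mean"]

print("Baseline:", baseline)
print("Follow-up:", followup)
print("Change in average monthly reports:", level_change)
```

Comparing whole phases rather than two single months keeps one unusually excitable month, such as December, from standing in for the entire baseline.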

Conclusion

Quasi-experimental designs are common in social work intervention research because, when designed correctly, they balance the intense resource needs of true experiments with the realities of research in practice. They still offer researchers tools to gather robust evidence about whether interventions are having positive effects for clients.

Key Takeaways

- Quasi-experimental designs are similar to true experiments, but do not require random assignment to experimental and control groups.

- In quasi-experimental projects, the group not receiving the treatment is called the comparison group, not the control group.

- Nonequivalent comparison group design is nearly identical to pretest/post-test experimental design, but participants are not randomly assigned to the experimental and comparison groups. As a result, this design provides less robust evidence for causality.

- Time series design does not have a comparison group, and instead compares the condition of participants before and after the intervention by measuring relevant factors at multiple points in time. This allows researchers to mitigate the error introduced by the passage of time.

- Ex post facto comparison group designs are also similar to true experiments, but experimental and comparison groups are constructed after the intervention is over. This makes it more difficult to control for the effect of extraneous variables, but still provides useful evidence for causality because it maintains time order.


-

when a measure does not indicate the presence of a phenomenon, when in reality it is present

- False positive

-

when a measure indicates the presence of a phenomenon, when in reality it is not present
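
As a rough illustration of false negatives and false positives, the sketch below counts both for a made-up screening measure. The lists `truly_present` and `measure_says_present` are hypothetical data invented for this example, not results from any study.

```python
# Hypothetical data: whether the phenomenon is really present for each person,
# and whether the measure indicated that it was present.
truly_present = [True, True, False, False, True, False]
measure_says_present = [True, False, False, True, True, False]

pairs = list(zip(truly_present, measure_says_present))

# False negative: the measure misses a phenomenon that is really there.
false_negatives = sum(1 for truth, flagged in pairs if truth and not flagged)

# False positive: the measure flags a phenomenon that is not there.
false_positives = sum(1 for truth, flagged in pairs if flagged and not truth)

print("False negatives:", false_negatives)
print("False positives:", false_positives)
```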

- Feasibility

-

whether you can practically and ethically complete the research project you propose

- Feminist methodologies

-

Research methods in this tradition seek to, "remove the power imbalance between research and subject; (are) politically motivated in that (they) seeks to change social inequality; and (they) begin with the standpoints and experiences of women".[2]

- Fence-sitters

-

respondents to a survey who choose neutral response options, even if they have an opinion

- Field notes

-

Notes that the researcher takes while in the field, gathering data.

- Filter or Screening Questions

-

Questions that screen out/identify a certain type of respondent, usually to direct them to a certain part of the survey.

- Filter question

-

items on a questionnaire designed to identify some subset of survey respondents who are asked additional questions that are not relevant to the entire sample

- Floaters

-

respondents to a survey who choose a substantive answer to a question when really, they don’t understand the question or don’t have an opinion

- Focus group

-

Type of interview where participants answer questions in a group.

- Focus group guide

-

A document that will outline the instructions for conducting your focus group, including the questions you will ask participants. It often concludes with a debriefing statement for the group, as well.

- Focus groups

-

A form of data gathering where researchers ask a group of participants to respond to a series of (mostly open-ended) questions.

- Fraud

-

Deliberate actions taken to impact a research project. For example, deliberately falsifying data, plagiarism, not being truthful about the methodology, etc.

- Frequency table

-

A table that lays out how many cases fall into each level of a variable.
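
A frequency table can be built in a few lines. This is a minimal sketch using Python's standard library; the variable `housing_status` and its values are hypothetical.

```python
from collections import Counter

# Hypothetical responses to a question about housing status.
housing_status = ["rent", "own", "rent", "unhoused", "rent", "own"]

# Count how many cases fall into each level of the variable.
frequency_table = Counter(housing_status)

# Print the levels from most to least common.
for level, count in frequency_table.most_common():
    print(f"{level:<10}{count}")
```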

- Full board review

-

A full board review will involve multiple members of the IRB evaluating your proposal. When researchers submit a proposal under full board review, the full IRB board will meet, discuss any questions or concerns with the study, invite the researcher to answer questions and defend their proposal, and vote to approve the study or send it back for revision. Full board proposals pose greater than minimal risk to participants. They may also involve the participation of vulnerable populations, or people who need additional protection from the IRB.

- Gatekeeper

-

the people or organizations who control access to the population you want to study

- Generalizability

-

The ability to apply research findings beyond the study sample to some broader population.

- Generalizable findings

-

Findings from a research study that apply to a larger group of people (beyond the sample). Producing generalizable findings requires starting with a representative sample.

- Generalize

-

(as in generalization) to make claims about a large population based on a smaller sample of people or items

- Gray literature

-

research reports released by non-commercial publishers, such as government agencies, policy organizations, and think-tanks

- Grounded theory

-

A type of research design that is often used to study a process or identify a theory about how something works.

- Grounded theory analysis

-

A form of qualitative analysis that aims to develop a theory or understanding of how some event or series of events occurs by closely examining participant knowledge and experience of that event(s).

- Guttman scale

-

A composite scale using a series of items arranged in increasing order of intensity of the construct of interest, from least intense to most intense.

- Heterogeneity

-

The quality of or the amount of difference or variation in data or research participants.

- Histogram

-

a graphical display of a distribution.

- Homogeneity

-

The quality of or the amount of similarity or consistency in data or research participants.

- Human instrument

-

As researchers in the social sciences, we ourselves are the main tool for conducting our studies.

- Human subjects

-

The US Department of Health and Human Services (USDHHS) defines a human subject as “a living individual about whom an investigator (whether professional or student) conducting research obtains (1) data through intervention or interaction with the individual, or (2) identifiable private information” (USDHHS, 1993, para. 1). [2]

- Hypothesis

-

a statement describing a researcher’s expectation regarding what they anticipate finding

- Hypothetico-deductive method

-

A cyclical process of theory development, starting with an observed phenomenon, then developing or using a theory to make a specific prediction of what should happen if that theory is correct, testing that prediction, refining the theory in light of the findings, and using that refined theory to develop new hypotheses, and so on.

- Idiographic causal explanation

-

attempts to explain or describe your phenomenon exhaustively, based on the subjective understandings of your participants

- Idiographic understanding

-

A rich, deep, detailed understanding of a unique person, small group, and/or set of circumstances.

- Impact

-

The long-term condition that occurs at the end of a defined time period after an intervention.

- Implementation science

-

The scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices into routine practice, and, hence, to improve the quality and effectiveness of health services.

- Importance

-

the impact your study will have on participants, communities, scientific knowledge, and social justice

- Incidence

-

the number or rate of new cases of a phenomenon or condition during a specified time period
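
When incidence is reported as a rate, it is usually scaled to a convenient base such as "per 1,000 people." The sketch below shows the arithmetic with invented numbers. The choice of a one-year period, the per-1,000 base, and the use of a "population at risk" as the denominator are assumptions made for illustration.

```python
# Hypothetical figures for one county over one calendar year.
new_cases = 45               # new cases identified during the year
population_at_risk = 15_000  # people who could have become a new case

# Incidence expressed as a rate per 1,000 people at risk.
incidence_per_1000 = new_cases * 1000 / population_at_risk

print(f"{incidence_per_1000:.1f} new cases per 1,000 people")
```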

- Inclusion criteria

-

Inclusion criteria are general requirements a person must possess to be a part of your sample.

- Independent variable

-

causes a change in the dependent variable

- Index

-

a composite score derived from aggregating measures of multiple concepts (called components) using a set of rules and formulas

- Indicators

-

Clues that demonstrate the presence, intensity, or other aspects of a concept in the real world

- Indirect observables

-

things that require subtle and complex observations to measure; we may need to use existing knowledge and intuition to define them.

- Individual matching

-

In nonequivalent comparison group designs, the process by which researchers match individual cases in the experimental group to similar cases in the comparison group.
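
One simple way to picture individual matching is a nearest-neighbor search. The sketch below pairs each experimental case with the closest unused comparison case. Matching on age alone, the participant IDs, and the "closest available" rule are all assumptions made for illustration; real studies typically match on several relevant factors.

```python
# Hypothetical participants, described only by an ID and an age.
experimental = [("E1", 24), ("E2", 37), ("E3", 52)]
comparison_pool = [("C1", 50), ("C2", 25), ("C3", 40), ("C4", 31)]

matches = {}
available = list(comparison_pool)

for exp_id, exp_age in experimental:
    # Pick the not-yet-used comparison case closest in age.
    best = min(available, key=lambda case: abs(case[1] - exp_age))
    matches[exp_id] = best[0]
    # Each comparison case can be matched only once.
    available.remove(best)

print(matches)
```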

- Inductive

-

inductive reasoning draws conclusions from individual observations

- Inductive analysis

-

An approach to data analysis in which we gather our data first and then generate a theory about its meaning through our analysis.

- Inductive reasoning

-

when a researcher starts with a set of observations and then moves from particular experiences to a more general set of propositions about those experiences

- Information literacy

-

"a set of abilities requiring individuals to 'recognize when information is needed and have the ability to locate, evaluate, and use effectively the needed information" (American Library Association, 2020)

- Information privilege

-

the accumulation of special rights and advantages not available to others in the area of information access

- Informed consent

-

A process through which the researcher explains the research process, procedures, risks and benefits to a potential participant, usually through a written document, which the participant then signs, as evidence of their agreement to participate.

- Institutional Review Board

-

an administrative body established to protect the rights and welfare of human research subjects recruited to participate in research activities conducted under the auspices of the institution with which it is affiliated

- Internal consistency

-

The consistency of people’s responses across the items on a multiple-item measure. Responses about the same underlying construct should be correlated, though not perfectly.

- Internal validity

-

Ability to say that one variable "causes" something to happen to another variable. Very important to assess when thinking about studies that examine causation such as experimental or quasi-experimental designs.

- Interpretivism

-

a paradigm based on the idea that social context and interaction frame our realities

- Interrater reliability

-

The extent to which different observers are consistent in their assessment or rating of a particular characteristic or item.
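
Percent agreement is one simple (and limited) way to put a number on interrater reliability. The sketch below uses invented ratings from two hypothetical observers. Note that percent agreement does not correct for agreement that would happen by chance.

```python
# Hypothetical ratings of the same ten case notes by two observers.
rater_a = ["high", "low", "low", "high", "medium",
           "low", "high", "medium", "low", "high"]
rater_b = ["high", "low", "medium", "high", "medium",
           "low", "low", "medium", "low", "high"]

# Count the items on which both observers gave the same rating.
agreements = sum(1 for a, b in zip(rater_a, rater_b) if a == b)

# Express agreement as a percentage of all rated items.
percent_agreement = agreements * 100 / len(rater_a)

print(f"{agreements} of {len(rater_a)} ratings match ({percent_agreement:.0f}%)")
```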

- Intersectional identities

-

the various aspects or dimensions that come together in forming our identity

- Interval

-

A level of measurement that is continuous, can be rank ordered, is exhaustive and mutually exclusive, and for which the distance between attributes is known to be equal, but for which there is no true zero point.

- Interview guide

-

An interview guide is a document that outlines the flow of information during your interview, including a greeting and introduction to orient your participant to the topic, your questions and any probes, and any debriefing statement you might include. If you are part of a research team, your interview guide may also include instructions for the interviewer if certain things are brought up in the interview or as general guidance.

- Interview schedule

-

A questionnaire that is read to respondents

- Interviewer effect

-

any possible changes in interviewee responses based on how or when the researcher presents question-and-answer options

- Interviews

-

A form of data gathering where researchers ask individual participants to respond to a series of (mostly open-ended) questions.

- Intra-rater reliability

-

Type of reliability in which a rater rates something the same way on two different occasions.

- Intraclass correlation coefficient

-

a statistic ranging from 0 to 1 that measures how much outcomes (1) within a cluster are likely to be similar or (2) between different clusters are likely to be different

- Intuition

-

a “gut feeling” about what to do based on previous experience or knowledge

- Intuitions

-

your gut feelings

- Inverse association

-

occurs when two variables change in opposite directions - one goes up, the other goes down and vice versa; also called negative association

- Item-order effect

-

when the order in which the items are presented affects people’s responses

- Iterative

-

An iterative approach means that after planning and once we begin collecting data, we begin analyzing data as it is coming in. This early analysis of our (incomplete) data then impacts our planning, ongoing data gathering and future analysis as it progresses.

- Iterative process

-

a nonlinear process in which the original product is revised over and over again to improve it

- Justice

-

One of the three ethical principles in the Belmont Report. States that benefits and burdens of research should be distributed fairly.

- Key informant

-

Someone who is especially knowledgeable about a topic being studied.

- Keywords

-

the words or phrases in your search query

- Leading question

-

when a participant's answer to a question is altered due to the way in which a question is written. In essence, the question leads the participant to answer in a specific way.

- Level of measurement

-

The level that describes how data for variables are recorded. The level of measurement defines the type of operations that can be conducted with your data. There are four levels: nominal, ordinal, interval, and ratio.

- Likert scale

-

measuring people’s attitude toward something by assessing their level of agreement with several statements about it
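
To make this concrete, here is a minimal sketch of scoring one respondent on a Likert scale. The 1 to 5 coding, the response labels, and the four answers are assumptions for illustration; actual instruments specify their own response options and scoring rules.

```python
# Response options mapped to numbers (a common, but not universal, convention).
AGREEMENT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}

# One hypothetical respondent's answers to four statements about a program.
responses = ["agree", "strongly agree", "neutral", "agree"]

# Convert each answer to its number, then combine across statements.
scores = [AGREEMENT[answer] for answer in responses]
total_score = sum(scores)
average_score = total_score / len(scores)

print(total_score, average_score)
```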

- Linear

-

A research process where you create a plan, you gather your data, you analyze your data and each step is completed before you proceed to the next.

- Linear regression

-

a statistical technique that can be used to predict how an independent variable affects a dependent variable in the context of other variables.

- Logic

-

A science that deals with the principles and criteria of validity of inference and demonstration: the science of the formal principles of reasoning.

- Logic model

-

A graphic depiction (road map) that presents the shared relationships among the resources, activities, outputs, outcomes, and impact for your program

- Logical fallacy

-

an error in reasoning related to the underlying logic of an argument or thought

- Longitudinal survey

-

Researcher collects data from participants at multiple points over an extended period of time using a questionnaire.

- Macro-level

-

examining social structures and institutions

- Magnitude

-

The strength of a correlation, determined by the absolute value of a correlation coefficient
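
The sketch below compares the magnitude of two made-up correlation coefficients. The variable names and values are hypothetical. The point is only that the sign is set aside when judging strength.

```python
# Hypothetical correlation coefficients from two analyses.
r_stress_sleep = -0.62
r_income_sleep = 0.35

# Magnitude ignores the sign, which only tells us the direction.
magnitude_stress = abs(r_stress_sleep)
magnitude_income = abs(r_income_sleep)

# The negative correlation is the stronger one here.
stronger = "stress" if magnitude_stress > magnitude_income else "income"

print(magnitude_stress, magnitude_income, stronger)
```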

- Matrix question

-

a type of survey question that lists a set of questions for which the response options are all the same in a grid layout

- Maximum variation sampling

-

A purposive sampling strategy where you choose cases because they represent a range of very different perspectives on a topic

- Mean

-

Also called the average, the mean is calculated by adding all your cases and dividing the total by the number of cases.
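
The calculation can be sketched as follows, using a small set of invented ages. The built-in `statistics.mean` function gives the same answer as doing the arithmetic by hand.

```python
import statistics

# Hypothetical ages of five participants.
ages = [22, 25, 31, 34, 38]

# Add all the values, then divide by the number of cases.
mean_by_hand = sum(ages) / len(ages)

# The standard library does the same calculation.
mean_from_library = statistics.mean(ages)

print(mean_by_hand, mean_from_library)
```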

- Measure of central tendency

-

One number that can give you an idea about the distribution of your data.

- Measurement

-

The process by which we describe and ascribe meaning to the key facts, concepts, or other phenomena under investigation in a research study.

- Measurement error

-

The difference between the value that we get when we measure something and the true value

- Measurement instrument

-

Instrument or tool that operationalizes (measures) the concept that you are studying.

- Median

-

The value in the middle when all our values are placed in numerical order. Also called the 50th percentile.
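
Here is a small sketch of finding the median, using invented income figures. When there is an even number of values, the median is the average of the two middle values; the example uses an odd number so there is a single middle value.

```python
import statistics

# Hypothetical annual incomes, including one very high value.
incomes = [31_000, 18_000, 250_000, 22_000, 25_000]

# Place the values in numerical order and take the middle one.
ordered = sorted(incomes)
median_by_hand = ordered[len(ordered) // 2]  # valid because the count is odd

# The standard library handles odd and even counts.
median_income = statistics.median(incomes)

print(median_by_hand, median_income)
```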

- Mediating variables

-

Variables that refer to the mechanisms by which an independent variable might affect a dependent variable.

- Member-checking

-

Member checking involves taking your results back to participants to see if we "got it right" in our analysis. While our findings bring together many different people's data into one set of findings, participants should still be able to recognize their input and feel like their ideas and experiences have been captured adequately.

- Membership-based approach

-

approach to recruitment where participants are members of an organization or social group with identified membership

- Memoing

-

Memoing is the act of recording your thoughts, reactions, quandaries as you are reviewing the data you are gathering.

- Memorandum of Understanding (MOU) or Memorandum of Agreement (MOA)

-

A written agreement between parties that want to participate in a collaborative project.

- Meso-level

-

level of interaction or activity that exists between groups and within communities

- Meta-analysis

-

a study that combines raw data from multiple quantitative studies and analyzes the pooled data using statistics

- Meta-synthesis

-

a study that combines primary data from multiple qualitative sources and analyzes the pooled data

- Methodological justification

-

an explanation of why you chose the specific design of your study; why do your chosen methods fit with the aim of your research

- Methodology

-

A description of how research is conducted.

- Micro-level

-

level of interaction or activity that exists at the smallest level, usually among individuals

- Misconduct

-

Usually unintentional. Very broad category that covers things such as not using the proper statistics for analysis, injecting bias into your study and in interpreting results, being careless with your research methodology

- Mixed methods research

-

when researchers use both quantitative and qualitative methods in a project

- Mode

-

The most commonly occurring value of a variable.
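
Unlike the mean and median, the mode can be found for categories as well as numbers. A minimal sketch with a hypothetical `referral_sources` variable:

```python
import statistics

# Hypothetical referral sources for six clients.
referral_sources = ["school", "self", "court", "school", "self", "school"]

# The value that appears most often.
most_common_source = statistics.mode(referral_sources)

print(most_common_source)
```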

- Moderating variable

-

A variable that affects the strength and/or direction of the relationship between the independent and dependent variables.

- Multi-dimensional concepts

-

concepts that are comprised of multiple elements

- Multidimensional scales

-

An empirical structure for measuring items or indicators of the multiple dimensions of a concept.

- Multivariate analysis

-

A group of statistical techniques that examines the relationship between at least three variables

- Mutually exclusive categories

-

Mutually exclusive categories are options for closed ended questions that do not overlap, so people only fit into one category or another, not both.
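
One way to check a set of response options is to test every plausible answer against them. The sketch below does this for two hypothetical sets of age brackets. The bracket labels, the age range of 18 to 120, and the helper `matching_brackets` are invented for this example.

```python
# Two hypothetical sets of response options for "What is your age?"
good_brackets = {
    "18-24": range(18, 25),
    "25-34": range(25, 35),
    "35-44": range(35, 45),
    "45 or older": range(45, 121),
}
overlapping_brackets = {
    "18-25": range(18, 26),
    "25-35": range(25, 36),
    "35 or older": range(35, 121),
}

def matching_brackets(age, brackets):
    """Return the labels of every bracket that contains this age."""
    return [label for label, ages in brackets.items() if age in ages]

# In the good set, every age from 18 to 120 fits exactly one option.
good_is_clean = all(
    len(matching_brackets(age, good_brackets)) == 1 for age in range(18, 121)
)

# In the overlapping set, a 25-year-old fits two options.
options_for_25 = matching_brackets(25, overlapping_brackets)

print(good_is_clean, options_for_25)
```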

- Narratives

-

Those stories that we compose as human beings that allow us to make meaning of our experiences and the world around us

- National Research Act

-

US legislation passed in 1974, which created the National Commission for the Protection of Human Subjects in Biomedical and Behavioral Research, which went on to produce The Belmont Report.

- Natural setting

-

collecting data in the field where it naturally/normally occurs

- Naturalistic observation

-

Making qualitative observations that attempt to capture the subjects of the observation as unobtrusively as possible and with limited structure to the observation.

- Negative case analysis

-

Including data that contrasts, contradicts, or challenges the majority of evidence that we have found or expect to find

- Negative correlation

-

occurs when two variables change in opposite directions - one goes up, the other goes down and vice versa

- Negotiated outcomes

-

ensuring that we have correctly captured and reflected an accurate understanding in our findings by clarifying and verifying our findings with our participants

- Neutrality

-

The idea that qualitative researchers attempt to limit or at the very least account for their own biases, motivations, interests and opinions during the research process.

- Nominal

-

The lowest level of measurement; categories cannot be mathematically ranked, though they are exhaustive and mutually exclusive

- Nomothetic causality

-

causal explanations that can be universally applied to groups, such as scientific laws or universal truths

- Nomothetic explanations

-

provides a more general, sweeping explanation that is universally true for all people

- Non-probability sampling

-

sampling approaches for which a person’s likelihood of being selected for membership in the sample is unknown

- Non-relational

-

Referring to data analysis that doesn't examine how variables relate to each other.

- Non-response bias

-

If the majority of the targeted respondents fail to respond to a survey, then a legitimate concern is whether non-respondents are not responding due to a systematic reason, which may raise questions about the validity of the study’s results, especially as this relates to the representativeness of the sample.

- Nonresponse Bias

-

The bias that occurs when those who respond to your request to participate in a study are different from those who do not respond to your request to participate in a study.

- Nonspuriousness

-

an association between two variables that is NOT caused by a third variable

- Null hypothesis

-

the assumption that no relationship exists between the variables in question

- Nuremberg Code

-

The Nuremberg Code is a 10-point set of research principles designed to guide doctors and scientists who conduct research on human subjects, crafted in response to the atrocities committed during the Holocaust.

- Objective truth

-

a single truth, observed without bias, that is universally applicable

- Observation

-

Observation is a tool for data gathering where researchers rely on their own senses (e.g. sight, sound) to gather information on a topic.

- Observational terms

-

In measurement, conditions that are easy to identify and verify through direct observation.

- Observations/cases

-

The rows in your data set. In social work, these are often your study participants (people), but can be anything from census tracts to black bears to trains.

- Observer triangulation

-

including more than one member of your research team to aid in analyzing the data

- Office of Human Research Protections

-

The federal government agency that oversees IRBs.

- One-way ANOVA

-

a statistical procedure to compare the means of a variable across three or more groups
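
The core of a one-way ANOVA is the F statistic, which compares variation between group means to variation within groups. The sketch below computes F by hand for three small, invented groups. The group names and scores are hypothetical, and turning F into a p-value (which requires the F distribution) is left to statistical software.

```python
from statistics import mean

# Hypothetical outcome scores for three program groups.
groups = {
    "program_a": [4, 5, 6],
    "program_b": [6, 7, 8],
    "program_c": [8, 9, 10],
}

all_scores = [score for scores in groups.values() for score in scores]
grand_mean = mean(all_scores)

# Variation of the group means around the grand mean.
ss_between = sum(
    len(scores) * (mean(scores) - grand_mean) ** 2 for scores in groups.values()
)

# Variation of individual scores around their own group mean.
ss_within = sum(
    (score - mean(scores)) ** 2 for scores in groups.values() for score in scores
)

df_between = len(groups) - 1
df_within = len(all_scores) - len(groups)

# F is the ratio of the two mean squares.
f_statistic = (ss_between / df_between) / (ss_within / df_within)

print(f_statistic)
```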

- Ontology

-

assumptions about what is real and true

- Open access

-

journal articles that are made freely available by the publisher

- Open coding

-

An initial phase of coding that involves reviewing the data to determine the preliminary ideas that seem important and potential labels that reflect their significance.

- Open science

-

sharing one's data and methods for the purposes of replication, verifiability, and collaboration of findings

- Open-ended question

-

Questions for which the researcher does not include response options, allowing for respondents to answer the question in their own words

- Operational definition

-

According to the APA Dictionary of Psychology, an operational definition is "a description of something in terms of the operations (procedures, actions, or processes) by which it could be observed and measured. For example, the operational definition of anxiety could be in terms of a test score, withdrawal from a situation, or activation of the sympathetic nervous system. The process of creating an operational definition is known as operationalization."

- Operationalization

-

process by which researchers spell out precisely how a concept will be measured in their study

- Oral histories

-

Oral histories are a type of qualitative research design that offers a detailed accounting of a person's life, some event, or experience. This story(ies) is aimed at answering a specific research question.

- Oral presentation

-

verbal presentation of research findings to a conference audience

- Ordinal

-

Level of measurement that follows nominal level. Has mutually exclusive categories and a hierarchy (rank order), but we cannot calculate a mathematical distance between attributes.

- Outliers

-

Extreme values in your data.

- P-value

-

summarizes the incompatibility between a particular set of data and a proposed model for the data, usually the null hypothesis. The lower the p-value, the more inconsistent the data are with the null hypothesis, indicating that the relationship is statistically significant.

- Panel presentations

-

group presentations that feature experts on a given issue, with time for audience question-and-answer

- Panel survey

-

A type of longitudinal design where the researchers gather data at multiple points in time and the same people participate in the survey each time it is administered.

- Paradigm

-

the overarching lens or framework that guides the theory and methods of a research study

- Participant

-

Those who are asked to contribute data in a research study; sometimes called respondents or subjects.

- Participatory research

-

An approach to research that more intentionally attempts to involve community members throughout the research process compared to more traditional research methods. In addition, participatory approaches often seek some concrete, tangible change for the benefit of the community (often defined by the community).

- Paywall

-

when a publisher prevents access to reading content unless the user pays money

- Peer debriefing

-

A qualitative research tool for enhancing rigor by partnering with a peer researcher who is not connected with your project (therefore more objective), to discuss project details, your decisions, perhaps your reflexive journal, as a means of helping to reduce researcher bias and maintain consistency and transparency in the research process.

- Peer review

-

a formal process in which other esteemed researchers and experts ensure your work meets the standards and expectations of the professional field

- Periodicals

-

trade publications, magazines, and newspapers

- Periodicity

-

the tendency for a pattern to occur at regular intervals

- Phenomenology

-

A qualitative research design that aims to capture and describe the lived experience of some event or "phenomenon" for a group of people.

- Photovoice

-

Photovoice is a technique that merges pictures with narrative (word or voice data that helps interpret the meaning or significance of the visual artifact). It is often used as a tool in CBPR.

- Pilot testing

-

Testing out your research materials in advance on people who are not included as participants in your study.

- Plausibility

-

as a criterion for a causal relationship, the relationship must make logical sense and seem possible

- Political case

-

A purposive sampling strategy that focuses on selecting cases that are important in representing a contemporary politicized issue.

- Population

-

the larger group of people you want to be able to make conclusions about based on the conclusions you draw from the people in your sample

- Positionality statement

-

A statement about the researcher's worldview and life experiences, specifically in respect to the research topic they are studying. It helps to demonstrate the subjective connection(s) the researcher has to the topic and is a way to encourage transparency in research.

- Positive correlation

-

Occurs when two variables move together in the same direction - as one increases, so does the other, or, as one decreases, so does the other

- Positivism

-

a paradigm guided by the principles of objectivity, knowability, and deductive logic

- Post-test

-

A measure of a participant's condition after an intervention or, if they are part of the control/comparison group, at the end of an experiment.

- Post-test only control group design

-

an experimental design in which participants are randomly assigned to control and treatment groups, one group receives an intervention, and both groups receive only a post-test assessment

- Poster presentation

-

presentations that use a poster to visually represent the elements of the study

- Power

-

the odds you will detect a significant relationship between variables when one is truly present in your sample

- Practical articles

-

describe “how things are done” or comment on pressing issues in practice (Wallace & Wray, 2016, p. 20)

- Practical implications

-

How well your findings can be translated and used in the "real world." For example, you may have a statistically significant correlation; however, the relationship may be very weak. This limits your ability to use these data for real world change.

- Practice effect

-

improvements in cognitive assessments due to exposure to the instrument

- Practice wisdom

-

knowledge gained through “learning by doing” that guides social work intervention and increases over time

- Pragmatism

-

a research paradigm that suspends questions of philosophical ‘truth’ and focuses more on how different philosophies, theories, and methods can be used strategically to resolve a problem or question within the researcher's unique context

- Pre-experimental design

- Predictive research

-

research with the goal of predicting (versus explaining) outcomes as a result of other variables

- Predictive validity

-

A type of criterion validity that examines how well your tool predicts a future criterion.

- Pretest

-

A measure of a participant's condition before they receive an intervention or treatment.

- Pretest and post-test control group design

-

a type of experimental design in which participants are randomly assigned to control and experimental groups, one group receives an intervention, and both groups receive pre- and post-test assessments

- Prevalence

-

the rate of a characteristic, condition, etc. in a population at a specific time point or time period

- Primary data

-

Data you have collected yourself.

- Primary source

-

in a literature review, a source that describes primary data collected and analyzed by the author, rather than only reviewing what other researchers have found

- Principle of replication

-

This means that one scientist could repeat another’s study with relative ease. By replicating a study, we may become more (or less) confident in the original study’s findings.

- Probability proportionate to size sampling

-

a type of cluster sampling, in which clusters are given different chances of being selected based on their size so that each element across all of the clusters has an equal chance of being selected
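
The arithmetic behind this can be worked through with a small example. The sketch below assumes three hypothetical agencies as clusters, one cluster drawn with probability proportional to its size, and a fixed number of 20 people then sampled from the chosen cluster. Under those assumptions, every person ends up with the same overall chance of selection.

```python
from fractions import Fraction

# Hypothetical clusters and the number of clients in each.
cluster_sizes = {"Agency A": 100, "Agency B": 300, "Agency C": 600}
total_clients = sum(cluster_sizes.values())

people_per_cluster = 20  # assumed fixed sample size within the chosen cluster

overall_chance = {}
for name, size in cluster_sizes.items():
    # Bigger clusters are more likely to be drawn.
    chance_cluster_drawn = Fraction(size, total_clients)
    # But each person in a bigger cluster is less likely to be picked within it.
    chance_person_picked = Fraction(people_per_cluster, size)
    # The two effects cancel out.
    overall_chance[name] = chance_cluster_drawn * chance_person_picked

for name, chance in overall_chance.items():
    print(name, chance)
```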

- Probability sampling

-

sampling approaches for which a person’s likelihood of being selected from the sampling frame is known

- Probes

-

Probes are brief prompts or follow-up questions that are used in qualitative interviewing to help draw out additional information on a particular question or idea.

- Process evaluation

-

An analysis of how well a program runs

- Professional development

-

the "uptake of formal and informal learning opportunities that deepen and extend...professional competence, including knowledge, beliefs, motivation, and self-regulatory skills" (Richter, Kunter, Klusmann, Lüdtke, & Baumert, 2014)

- Program evaluation

-

The systematic process by which we determine if social programs are meeting their goals, how well the program runs, whether the program had the desired effect, and whether the program has merit according to stakeholders (including in terms of the monetary costs and benefits)

- Prolonged engagement

-

As researchers, this means we are extensively spending time with participants or are in the community we are studying.

- Prospective survey design

-

In prospective studies, individuals are followed over time and data about them is collected as their characteristics or circumstances change.

- Proxy

-

a person who completes a survey on behalf of another person

- Pseudonyms

-

Fake names assigned in research to protect the identity of participants.

- Pseudoscience

-

claims about the world that appear scientific but are incompatible with the values and practices of science

- Psychometrics

-

The science of measurement. Involves using theory to assess measurement procedures and tools.

- Public approach

-

approach to recruitment where participants are sought in public spaces

- Purposive

-

In a purposive sample, participants are intentionally or hand-selected because of their specific expertise or experience.

- Qualitative data

-

data derived from analysis of texts. Usually, this is word data (like a conversation or journal entry) but can also include performances, pictures, and other means of expressing ideas.

- Qualitative methods

-

qualitative methods interpret language and behavior to understand the world from the perspectives of other people

- Qualitative research

-

Research that involves the use of data that represents human expression through words, pictures, movies, performance and other artifacts.

- Quantitative data

-

numerical data

- Quantitative interview

-

when a researcher administers a questionnaire verbally to participants

- Quantitative methods

-

quantitative methods examine numerical data to precisely describe and predict elements of the social world

- Quasi-experimental designs

-

a subtype of experimental design that is similar to a true experiment, but does not have randomly assigned control and treatment groups

- Queer(ing) methodologies

-

Research methods using this approach aim to question, challenge and/or reject knowledge that is commonly accepted and privileged in society and elevate and empower knowledge and perspectives that are often perceived as non-normative.

- Query

-

search terms used in a database to find sources of information, like articles or webpages

- Questionnaire

-

A research instrument consisting of a set of questions (items) intended to capture responses from participants in a standardized manner

- Quota

-

In a quota sample, the researcher identifies subgroups within a population that they want to make sure to include in their sample, and then sets a quota, or target number, of participants to recruit from each of these subgroups.

- Random assignment

-

using a random process to decide which participants are tested in which conditions
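One possible way to carry this out is sketched below. The function name `randomly_assign`, the participant labels, and the choice of two equally sized conditions are illustrative assumptions, not a prescribed procedure.

```python
import random

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two conditions."""
    rng = random.Random(seed)      # a seed makes the shuffle reproducible
    shuffled = list(participants)  # copy so the original list is untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return {
        "experimental": shuffled[:midpoint],
        "control": shuffled[midpoint:],
    }

# Ten hypothetical participants, labeled P1 through P10.
participants = [f"P{i}" for i in range(1, 11)]
groups = randomly_assign(participants, seed=42)
```

Because the shuffle, not the researcher, decides who lands in which group, each participant has the same chance of ending up in either condition.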

- Random error

-

Unpredictable error that does not result in scores that are consistently higher or lower on a given measure but are nevertheless inaccurate.

- Random errors

-

Errors that lack any perceptible pattern.

- Randomized controlled trial

-

an experiment that involves random assignment to a control and experimental group to evaluate the impact of an intervention or stimulus

- Random selection

-

An approach to sampling where all elements or people in a sampling frame have an equal chance of being selected for inclusion in a study's sample.

- Range

-

The difference between the highest and lowest scores in the distribution.
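As a quick illustration with made-up scores, the calculation looks like this:

```python
# Hypothetical scores on some measure.
scores = [12, 7, 19, 4, 15]

# Range = highest score minus lowest score.
score_range = max(scores) - min(scores)
print(score_range)  # 19 - 4 = 15
```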

- Rating scale

-

An ordered set of responses that participants must choose from.

- Ratio

-

The highest level of measurement. Characterized by mutually exclusive categories, a hierarchy (order), values that can be added, subtracted, multiplied, and divided, and the presence of an absolute zero.

- Raw data

-

unprocessed data that researchers can analyze using quantitative and qualitative methods (e.g., responses to a survey or interview transcripts)

- Recall bias

-

When respondents have difficulty providing accurate answers to questions due to the passage of time.

- Reciprocal determinism

-

Concept advanced by Albert Bandura that human behavior both shapes and is shaped by the environment.

- Reconstruction

-

The act of putting the deconstructed qualitative data back together during the analysis process in the search for meaning and ultimately the results of the study.

- Recruitment

-

the process by which the researcher informs potential participants about the study and attempts to get them to participate

- Reflexive journal

-

A research journal that helps the researcher to reflect on and consider their thoughts and reactions to the research process and how it may be shaping the study

- Reflexivity

-

How we understand and account for our influence, as researchers, on the research process.

- Reification

-

the process of considering something abstract to be a concrete object or thing; the fallacy of reification is assuming that abstract concepts exist in some concrete, tangible way

- Reliability

-

The degree to which an instrument reflects the true score rather than error. In statistical terms, reliability is the portion of observed variability in the sample that is accounted for by the true variability, not by error. Note: Reliability is necessary, but not sufficient, for measurement validity.
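The statistical statement can be written out as a ratio. The notation below is an assumption borrowed from classical test theory and is not used elsewhere in this glossary: an observed score $X$ is treated as a true score $T$ plus an error $E$, with the two assumed to be uncorrelated.

```latex
\[
X = T + E,
\qquad
\text{reliability}
  = \frac{\sigma_T^2}{\sigma_X^2}
  = \frac{\sigma_T^2}{\sigma_T^2 + \sigma_E^2}
\]
```

For example, if the true-score variance were 8 and the error variance were 2, reliability would be $8 / (8 + 2) = 0.8$, meaning 80% of the observed variability reflects true differences rather than error.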

- Representative sample

-

a sample that looks like the population from which it was selected in all respects that are potentially relevant to the study

- Representativeness

-

How closely your sample resembles the population from which it was drawn.

- Research

-

a systematic investigation, including development, testing, and evaluation, designed to develop or contribute to generalizable knowledge

- Research data repository

-

These are sites where contributing researchers can house data that other researchers can view and request permission to use

- Research methods

-

the methods researchers use to examine empirical data

- Research paradigm

-

a set of common philosophical (ontological, epistemological, and axiological) assumptions that inform research (e.g., Post-positivism, Constructivism, Pragmatism, Critical)

- Research proposal

-

a document produced by researchers that reviews the literature relevant to their topic and describes the methods they will use to conduct their study

- Research protocol

-

The details/steps outlining how a study will be carried out.

- Research questions

-

the questions a research study is designed to answer

- Researcher bias

-

The unintended influence that the researcher may have on the research process.

- Respect for persons

-

One of the three ethical principles espoused in the Belmont Report. Treating people as autonomous beings who have the right to make their own decisions. Acknowledging participants' personal dignity.

- Response option

-

the answers researchers provide to participants to choose from when completing a questionnaire

- Retrospective survey

-

Similar to other longitudinal studies, these surveys deal with changes over time, but like a cross-sectional study, they are administered only once. In a retrospective survey, participants are asked to report events from the past.

- Review articles

-

journal articles that summarize the findings of other researchers and establish the state of the literature in a given topic area

- Rigor

-

Rigor is the process through which we demonstrate, to the best of our ability, that our research is empirically sound and reflects a scientific approach to knowledge building.

- Roundtable presentations

-

facilitated discussions on a topic, often to generate new ideas

- Sample

-

the group of people you successfully recruit from your sampling frame to participate in your study

- Sample size

-

The number of cases found in your final sample.

- Sampling bias

-

Sampling bias is present when our sampling process results in a sample that does not represent our population in some way.

- Sampling distribution

-

the set of all possible samples you could draw for your study

- Sampling error

-

The difference in the statistical characteristics of the population (i.e., the population parameters) and those in the sample (i.e., the sample statistics); the error caused by observing characteristics of a sample rather than the entire population

- Sampling frame

-

the list of people from which a researcher will draw her sample

- Sampling interval

-

used in systematic random sampling; the distance between the elements in the sampling frame selected for the sample; determined by dividing the total number of elements in the sampling frame by the desired sample size
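A minimal sketch of the calculation follows. The function name `systematic_sample`, the 100-person frame, and the random starting point are illustrative assumptions; the sketch also assumes the frame size divides evenly by the desired sample size.

```python
import random

def systematic_sample(sampling_frame, sample_size, seed=None):
    """Select every k-th element, where k is the sampling interval."""
    # Sampling interval: elements in the frame divided by desired sample size.
    interval = len(sampling_frame) // sample_size
    # Assumed convention: start at a random position within the first interval.
    start = random.Random(seed).randrange(interval)
    selected = sampling_frame[start::interval][:sample_size]
    return interval, selected

# Hypothetical sampling frame of 100 people; we want a sample of 20.
frame = [f"Person {i}" for i in range(1, 101)]
interval, sample = systematic_sample(frame, 20, seed=1)
print(interval)  # 100 / 20 = 5
```

With a frame of 100 and a desired sample of 20, the interval is 5, so every fifth person after the starting point is selected.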

- Saturation

-

The point where gathering more data doesn't offer any new ideas or perspectives on the issue you are studying. Reaching saturation is an indication that we can stop qualitative data collection.

- Scatter plot

-

A graphical representation of data where the y-axis (the vertical one along the side) is your variable's value and the x-axis (the horizontal one along the bottom) represents the individual instance in your data.

- Scatterplots

-

Visual representations of the relationship between two interval/ratio variables that usually use dots to represent data points

- Science

-

a way of knowing that attempts to systematically collect and categorize facts or truths

- Secondary data

-

Data someone else has collected that you have permission to use in your research.

- Secondary data analysis

-

analyzing data that has been collected by another person or research group

- Secondary sources

-

interpret, discuss, and summarize primary sources

- Selection bias

-

the degree to which people in my sample differ from the overall population

- Selective or theoretical coding

-